Monitor the Performance of Your Python Django App with AppSignal

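When we observe a slow system, our first instinct might be to label it as failing. This presumption is widespread and highlights a fundamental truth: performance is synonymous with an application's maturity and readiness for production.

In web applications, where milliseconds can determine whether a user interaction succeeds or fails, the stakes are high. Performance is not merely a technical benchmark. It is a cornerstone of user satisfaction and operational efficiency.

Performance describes how responsive a system is under varying workloads. It is quantified by metrics such as CPU and memory utilization, response time, scalability, and throughput.

In this article, we'll explore how AppSignal can monitor and improve the performance of Django applications.

Let's get started!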

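Django Performance Monitoring Essentials

Achieving optimal performance in a Django application requires a multifaceted approach: building an application that runs efficiently and stays efficient as it scales. Key metrics are crucial throughout this process, because they provide concrete data to guide our optimization work. Let's explore some of them.

Key Metrics for Performance Monitoring

- Response Time: This is perhaps the most direct indicator of user experience. It measures the time taken to process a user's request and send back a response. In a Django application, factors such as database queries, view processing, and middleware operations affect response time.

- Throughput: Throughput is the number of requests your application can handle within a given time frame.

- Error Rate: The frequency of errors (4xx and 5xx HTTP responses) can point to problems in your code, database queries, or server configuration. By monitoring error rates, you can quickly identify and fix issues that would otherwise degrade the user experience.

- Database Performance Metrics: These include the number of queries per request, query execution time, and how efficiently database connections are used.

- Concurrent Users: When multiple users access your Django application at the same time, it must be able to serve all of them efficiently and without delays.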
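To make two of these definitions concrete, here is a minimal sketch that computes throughput and error rate from a batch of request records. The `RequestRecord` type, its fields, and the sample values are hypothetical stand-ins for whatever your logs or monitoring tool actually collect.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One handled HTTP request (hypothetical log record)."""
    path: str
    status: int  # HTTP status code that was returned


def throughput_rps(records: list[RequestRecord], window_s: float) -> float:
    """Requests handled per second over an observation window of window_s seconds."""
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    return len(records) / window_s


def error_rate(records: list[RequestRecord]) -> float:
    """Fraction of requests that ended in a 4xx or 5xx response."""
    if not records:
        return 0.0
    errors = sum(1 for r in records if 400 <= r.status <= 599)
    return errors / len(records)


if __name__ == "__main__":
    # Made-up sample: 4 requests observed over 2 seconds, 2 of them errors.
    sample = [
        RequestRecord("/store/", 200),
        RequestRecord("/store/product/smartphone/", 200),
        RequestRecord("/store/checkout/smartphone/", 500),
        RequestRecord("/store/product/unknown/", 404),
    ]
    print(throughput_rps(sample, window_s=2.0))  # 2.0
    print(error_rate(sample))  # 0.5
```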

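What We'll Build

In this article, we'll build a Django-based e-commerce store that is ready for high-traffic events, integrating AppSignal to monitor it, optimize it, and make sure it scales seamlessly under load. We'll also demonstrate how to use AppSignal to improve the performance of an existing application (in this case, the Open edX learning management system).

Project Setup

Prerequisites

To follow along, you'll need:

- Python 3.12.2

- An operating system supported by AppSignal

- An AppSignal account

- Basic knowledge of Django

Prepare the Project

Now let's create a directory for our project and clone the project into it from GitHub. Then we'll install all the requirements and run the migrations: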

```shell
mkdir django-performance && cd django-performance
python3.12 -m venv venv
source venv/bin/activate
git clone -b main https://github.com/amirtds/mystore
cd mystore
python3.12 -m pip install -r requirements.txt
python3.12 manage.py migrate
python3.12 manage.py runserver
```

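Now visit 127.0.0.1:8000. You should see something like this:

This Django application is a simple e-commerce store that offers users product listings, product details, and a checkout page. Once you've cloned and installed the app, create a superuser with Django's createsuperuser management command (python3.12 manage.py createsuperuser).

Now let's create a few products in the app. First, open the Django shell by running the following command: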

```shell
python3.12 manage.py shell
```

Create 3 categories and 3 products:

```python
from store.models import Category, Product

# Create categories
electronics = Category(name='Electronics', description='Gadgets and electronic devices.')
books = Category(name='Books', description='Read the world.')
clothing = Category(name='Clothing', description='Latest fashion and trends.')

# Save categories to the database
electronics.save()
books.save()
clothing.save()

# Now let's create new Products with slugs and image URLs
Product.objects.create(
    category=electronics,
    name='Smartphone',
    description='Latest model with high-end specs.',
    price=799.99,
    stock=30,
    available=True,
    slug='smartphone',
    image='products/iphone_14_pro_max.png'
)

Product.objects.create(
    category=books,
    name='Python Programming',
    description='Learn Python programming with this comprehensive guide.',
    price=39.99,
    stock=50,
    available=True,
    slug='python-programming',
    image='products/python_programming_book.png'
)

Product.objects.create(
    category=clothing,
    name='Jeans',
    description='Comfortable and stylish jeans for everyday wear.',
    price=49.99,
    stock=20,
    available=True,
    slug='jeans',
    image='products/jeans.png'
)
```

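Now exit the shell and run the server. You should see something like the following:

Install AppSignal

We'll install AppSignal and opentelemetry-instrumentation-django in our project.

Before installing these packages, log in to AppSignal with your credentials (you can sign up for a 30-day free trial). After selecting an organization, click Add app at the top right of the navigation bar. Choose Python as your language, and you'll receive a push-api-key.

Make sure your virtual environment is activated and run the following commands: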

```shell
python3.12 -m pip install appsignal==1.2.1
python3.12 -m appsignal install --push-api-key [YOUR-KEY]
python3.12 -m pip install opentelemetry-instrumentation-django==0.45b0
```

Provide an application name when the CLI prompts for one. After the installation, you should see a new file called __appsignal__.py in your project.

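Now let's create a new file called .env in the project root and add APPSIGNAL_PUSH_API_KEY=YOUR-KEY (remember to replace the value with your actual key). The configuration below reads this file with load_dotenv from the python-dotenv package, so install that package with pip if it isn't already in your environment. Then, change the content of the __appsignal__.py file to the following: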

```python
# __appsignal__.py
import os

from appsignal import Appsignal

# Load environment variables from the .env file
from dotenv import load_dotenv

load_dotenv()

# Get APPSIGNAL_PUSH_API_KEY from environment
push_api_key = os.getenv("APPSIGNAL_PUSH_API_KEY")

appsignal = Appsignal(
    active=True,
    name="mystore",
    push_api_key=push_api_key,
)
```

Next, update the manage.py file to read like this:

```python
#!/usr/bin/env python
# manage.py
"""Django's command-line utility for administrative tasks."""
import os
import sys

# Import the AppSignal client configured in __appsignal__.py
from __appsignal__ import appsignal  # new line


def main():
    """Run administrative tasks."""
    os.environ.setdefault("DJANGO_SETTINGS_MODULE", "mystore.settings")

    # Start AppSignal before Django handles the command
    appsignal.start()  # new line

    try:
        from django.core.management import execute_from_command_line
    except ImportError as exc:
        raise ImportError(
            "Couldn't import Django. Are you sure it's installed and "
            "available on your PYTHONPATH environment variable? Did you "
            "forget to activate a virtual environment?"
        ) from exc
    execute_from_command_line(sys.argv)


if __name__ == "__main__":
    main()
```

We've imported AppSignal and started it using the configuration from __appsignal__.py.

Please note that the changes we made to manage.py are for a development environment. In production, start AppSignal in wsgi.py or asgi.py instead. For more information, visit AppSignal's Django documentation.

Project Scenario: Optimizing for a New Year Sale and Monitoring Concurrent Users

As we approach the New Year sales on our Django-based e-commerce platform, we recall last year's challenges: increased traffic led to slow load times and even some downtime. This year, we aim to avoid these issues by thoroughly testing and optimizing our site beforehand. We'll use Locust to simulate user traffic and AppSignal to monitor our application's performance.

Creating a Locust Test for Simulated Traffic

First, we'll create a locustfile.py file that simulates simultaneous users navigating through critical parts of our site: the homepage, a product detail page, and the checkout page. This simulation helps us understand how our site performs under pressure.

Create the locustfile.py in the project root:

```python
# locustfile.py
from locust import HttpUser, between, task


class WebsiteUser(HttpUser):
    wait_time = between(1, 3)  # Users wait 1-3 seconds between tasks

    @task
    def index_page(self):
        self.client.get("/store/")

    @task(3)
    def view_product_detail(self):
        self.client.get("/store/product/smartphone/")

    @task(2)
    def view_checkout_page(self):
        self.client.get("/store/checkout/smartphone/")
```

In locustfile.py, users primarily visit the product detail page, followed by the checkout page, and occasionally return to the homepage. This pattern aims to mimic realistic user behavior during a sale.
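Locust chooses each user's next task at random, with probability proportional to its @task weight (a bare @task has weight 1). A short calculation, reusing the paths and weights from locustfile.py, shows the traffic mix this should produce:

```python
from fractions import Fraction

# Paths and @task weights copied from locustfile.py.
TASK_WEIGHTS = {
    "/store/": 1,                      # index_page: bare @task
    "/store/product/smartphone/": 3,   # view_product_detail: @task(3)
    "/store/checkout/smartphone/": 2,  # view_checkout_page: @task(2)
}


def expected_share(weights: dict[str, int]) -> dict[str, Fraction]:
    """Expected fraction of task executions per path, given task weights."""
    total = sum(weights.values())
    return {path: Fraction(weight, total) for path, weight in weights.items()}


if __name__ == "__main__":
    for path, share in expected_share(TASK_WEIGHTS).items():
        print(f"{path:30} {float(share):.1%}")
```

So roughly half of all requests should hit the product detail page, a third the checkout page, and a sixth the homepage.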

Before running Locust, ensure you have a product with the smartphone slug in the Django app. If you don't, go to /admin/store/product/ and create one.

Defining Acceptable Response Times

Before we start, let's define what we consider an acceptable response time. For a smooth user experience, we aim for:

- Homepage and product detail pages: under 1 second.

- Checkout page: under 1.5 seconds (due to typically higher complexity).

These targets ensure users experience minimal delay, keeping their engagement high.
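These targets can double as a simple pass/fail check after each load test. The sketch below compares measured response times (for example, the 90th percentile per endpoint) against the budgets above. Mapping the pages to these particular paths follows locustfile.py, and the measured numbers in the example are made up.

```python
# Response-time budgets in seconds, taken from the targets above.
BUDGETS_S = {
    "/store/": 1.0,                      # homepage
    "/store/product/smartphone/": 1.0,   # product detail page
    "/store/checkout/smartphone/": 1.5,  # checkout page
}


def over_budget(measured_s: dict[str, float], budgets_s: dict[str, float] = BUDGETS_S) -> list[str]:
    """Return, sorted, the paths whose measured time exceeds their budget.

    Paths without a budget are ignored.
    """
    return sorted(
        path
        for path, seconds in measured_s.items()
        if path in budgets_s and seconds > budgets_s[path]
    )


if __name__ == "__main__":
    # Hypothetical measurements, not results from this article.
    measured = {
        "/store/": 0.4,
        "/store/product/smartphone/": 1.2,
        "/store/checkout/smartphone/": 1.4,
    }
    print(over_budget(measured))  # ['/store/product/smartphone/']
```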

Conducting the Test and Monitoring Results

With our Locust test ready, we run it to simulate 500 users and observe the results in real time. Here's how:

- Start the Locust test by running locust -f locustfile.py in your terminal, then open http://localhost:8089 to configure and start the simulation. Set the Number of Users to 500 and the host to http://127.0.0.1:8000.

- Monitor performance in both Locust's web interface and AppSignal. Locust shows us request rates and response times, while AppSignal provides deeper insights into our Django app's behavior under load.
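Before reading Locust's numbers, it helps to know roughly what request rate to expect. Each simulated user runs a task and then waits; with between(1, 3) the wait is drawn uniformly from 1 to 3 seconds, so it averages 2 seconds. In steady state, the aggregate rate is therefore about users / (average wait + average response time). A back-of-the-envelope sketch, assuming all 500 users have already spawned and each task makes exactly one request:

```python
def expected_rps(users: int, min_wait_s: float, max_wait_s: float, avg_response_s: float = 0.0) -> float:
    """Approximate steady-state requests per second for a Locust test.

    Assumes one request per task and a wait drawn uniformly from
    [min_wait_s, max_wait_s] after each task, so each user completes one
    request every (mean wait + response time) seconds on average.
    """
    if users < 0 or min_wait_s < 0 or max_wait_s < min_wait_s or avg_response_s < 0:
        raise ValueError("invalid parameters")
    cycle_s = (min_wait_s + max_wait_s) / 2 + avg_response_s
    if cycle_s == 0:
        raise ValueError("cycle time must be positive")
    return users / cycle_s


if __name__ == "__main__":
    print(expected_rps(500, 1, 3))                      # 250.0 with instant responses
    print(expected_rps(500, 1, 3, avg_response_s=0.5))  # 200.0 if responses take 0.5 s
```

If Locust reports a rate well below this estimate once all users are running, response times are probably growing under load, which is what the AppSignal data should help explain.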

After running Locust, you can find information about the load test in its dashboard:

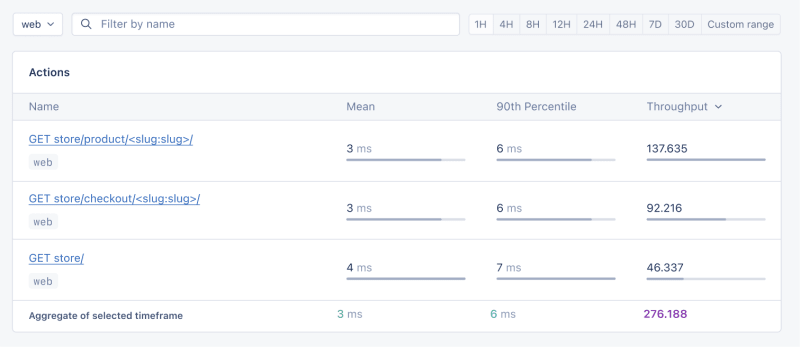

Now, go to your application page in AppSignal. Under the Performance section, click on Actions and you should see something like this:

- Mean: This is the average response time for all the requests made to a particular endpoint. It provides a general idea of how long it takes for the server to respond. In our context, any mean response time greater than 1 second could be considered a red flag, indicating that our application's performance might not meet user expectations for speed.

- 90th Percentile: This is the response time at the 90th percentile. For example, for GET /store/ we have 7 ms, which means 90% of requests completed in 7 ms or less.

- Throughput: The number of requests handled per second.

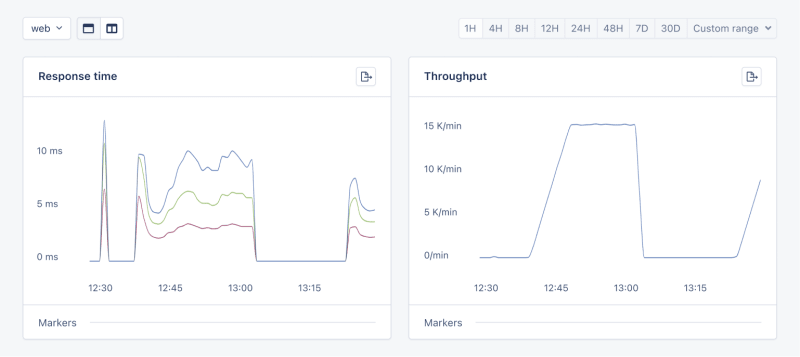
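The mean and the 90th percentile can tell different stories: a few very slow requests pull the mean up, while the 90th percentile is unaffected by the slowest 10% of requests. The sketch below uses the nearest-rank definition of a percentile on a made-up set of response times. Monitoring tools, AppSignal included, may compute percentiles differently (for example from histograms), so treat this as an illustration of the idea rather than AppSignal's calculation.

```python
import math
import statistics


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least pct%
    of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical response times in milliseconds; one request was very slow.
    durations_ms = [5, 6, 6, 7, 7, 7, 7, 8, 30, 250]
    print(statistics.fmean(durations_ms))  # 33.3, dominated by the 250 ms outlier
    print(percentile(durations_ms, 90))    # 30: 9 of the 10 requests took <= 30 ms
```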

Now let's click on the Graphs under Performance:

We need to prepare our site for the New Year sales, as response times might exceed our targets. Here's a simplified plan:

- Database Queries: Identify and optimize slow queries, since they are a frequent cause of performance issues.

- Static Assets: Ensure static assets are properly cached. Use a CDN for better delivery speeds.

- Application Resources: Sometimes, the solution is as straightforward as adding more RAM and CPUs.

Database Operations

Understanding Database Performance Impact

When it comes to web applications, one of the most common sources of slow performance is database queries. Every time a user performs an action that requires data retrieval or manipulation, a query is made to the database.

If these queries are not well-optimized, they can take a considerable amount of time to execute, leading to a sluggish user experience. That's why it's crucial to monitor and optimize our queries, ensuring they're efficient and don't become the bottleneck in our application's performance.
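A common way unoptimized queries slow a page down is the N+1 pattern: one query loads a list of rows, then one extra query runs per row to fetch related data. The self-contained sketch below uses Python's built-in sqlite3 module (not Django's ORM) and a trace callback to count the statements each approach executes; the tables only loosely mirror the store's categories and products. In Django, the usual fix for a ForeignKey such as Product.category (assuming it is one) is `select_related("category")`.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory database with a few categories and products (illustrative schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE category (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE product (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            category_id INTEGER NOT NULL REFERENCES category(id)
        );
        INSERT INTO category VALUES (1, 'Electronics'), (2, 'Books'), (3, 'Clothing');
        INSERT INTO product VALUES
            (1, 'Smartphone', 1), (2, 'Python Programming', 2), (3, 'Jeans', 3);
        """
    )
    return conn


def count_statements(conn: sqlite3.Connection, fn):
    """Run fn(conn) and return (result, number of SQL statements executed)."""
    executed: list[str] = []
    conn.set_trace_callback(executed.append)
    try:
        result = fn(conn)
    finally:
        conn.set_trace_callback(None)
    return result, len(executed)


def products_n_plus_one(conn: sqlite3.Connection) -> list[tuple[str, str]]:
    # 1 query for the products, then 1 more query per product for its category.
    rows = conn.execute("SELECT name, category_id FROM product ORDER BY id").fetchall()
    return [
        (name, conn.execute("SELECT name FROM category WHERE id = ?", (cat_id,)).fetchone()[0])
        for name, cat_id in rows
    ]


def products_joined(conn: sqlite3.Connection) -> list[tuple[str, str]]:
    # A single JOIN returns the same data in one query.
    return conn.execute(
        "SELECT p.name, c.name FROM product AS p "
        "JOIN category AS c ON c.id = p.category_id ORDER BY p.id"
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    print(count_statements(conn, products_n_plus_one)[1])  # 4 = 1 + one per product
    print(count_statements(conn, products_joined)[1])      # 1
```

With 3 products the difference is small, but the N+1 version grows by one query per row, which is exactly the kind of cost that shows up in per-request database metrics.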

Instrumentation and Spans

Before diving into the implementation, let's clarify two key concepts in performance monitoring:

- Instrumentation

- Spans

Instrumentation is the process of augmenting code to measure its performance and behavior during execution. Think of it like fitting your car with a dashboard that tells you not just the speed, but also the engine's performance, fuel efficiency, and other diagnostics while you drive.

Spans, on the other hand, are the specific segments of time measured by instrumentation. In our car analogy, a span would be the time taken for a specific part of your journey, like from your home to the highway. In the context of web applications, a span could represent the time taken to execute a database query, process a request, or complete any other discrete operation.

Instrumentation helps us create a series of spans that together form a detailed timeline of how a request is handled. This timeline is invaluable for pinpointing where delays occur and understanding the overall flow of a request through our system.
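To see how nested spans add up to a timeline, here is a toy tracer built only on the standard library. It is a teaching sketch, not OpenTelemetry or AppSignal: the `ToyTracer` class and the span names are made up, and real tracers also record trace IDs, attributes, and parent links, and export spans to a backend.

```python
import time
from contextlib import contextmanager


class ToyTracer:
    """Records nested, timed spans (illustration only, not OpenTelemetry)."""

    def __init__(self) -> None:
        # Finished spans as (name, depth, start_s, duration_s) tuples.
        self.spans: list[tuple[str, int, float, float]] = []
        self._depth = 0

    @contextmanager
    def span(self, name: str):
        depth = self._depth
        start = time.perf_counter()
        self._depth += 1
        try:
            yield
        finally:
            self._depth -= 1
            self.spans.append((name, depth, start, time.perf_counter() - start))

    def timeline(self) -> list[str]:
        """Spans in start order, indented by nesting depth."""
        ordered = sorted(self.spans, key=lambda s: (s[2], s[1]))
        return [f"{'  ' * depth}{name}: {duration * 1000:.1f} ms" for name, depth, _, duration in ordered]


if __name__ == "__main__":
    tracer = ToyTracer()
    with tracer.span("POST /store/purchase/<slug>"):
        with tracer.span("retrieve or create customer"):
            time.sleep(0.02)  # stand-in for a database query
        with tracer.span("create purchase record"):
            time.sleep(0.01)  # stand-in for another query
    print("\n".join(tracer.timeline()))
```

The outer span always lasts at least as long as its children combined, so the gap between them is time spent in the view itself rather than in the measured queries.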

Implementing Instrumentation in Our Code

With our PurchaseProductView, we're particularly interested in the database interactions that create customer records and process purchases. By adding instrumentation to this view, we'll be able to measure these interactions and get actionable data on their performance.

Here's how we integrate AppSignal's custom instrumentation into our Django view:

```python
# store/views.py

# Import OpenTelemetry's trace module for creating custom spans
from opentelemetry import trace

# Import AppSignal's set_root_name for customizing the trace name
from appsignal import set_root_name


# Inside the PurchaseProductView
def post(self, request, *args, **kwargs):
    # Initialize the tracer for this view
    tracer = trace.get_tracer(__name__)

    # Start a new span for the view using a 'with' statement
    with tracer.start_as_current_span("PurchaseProductView"):
        # Customize the name of the trace to be more descriptive
        set_root_name("POST /store/purchase/<slug>")

        # ... existing code to handle the purchase ...

        # Start another span to monitor the database query performance
        with tracer.start_as_current_span("Database Query - Retrieve or Create Customer"):
            # ... code to retrieve or create a customer ...
            ...

        # Yet another span to monitor the purchase record creation
        with tracer.start_as_current_span("Database Query - Create Purchase Record"):
            # ... code to create a purchase record ...
            ...
```

See the full code of the view after the modification.

In this updated view, custom instrumentation is added to measure the performance of database queries when retrieving or creating a customer and creating a purchase record.

Now, after purchasing a product, you should see the purchase event in the Slow events section of the Performance dashboard, along with its performance and how long the queries take to run.

purchase is the event we added to our view.

Using AppSignal with an Existing Django App

In this section, we are going to see how we can integrate AppSignal with Open edX, an open-source learning management system based on Python and Django.

Monitoring the performance of a learning platform like Open edX is very important, since a slow experience directly hurts students' engagement with the learning materials. For example, a large number of users might decide not to continue with a course.

Integrate AppSignal

Here, we can follow steps similar to those in the Project Setup section. However, for Open edX, we'll follow the production setup and initialize AppSignal in wsgi.py. Check out this commit to see how AppSignal is installed and integrated with Open edX.

Monitor Open edX Performance

Now we'll interact with our platform and see the performance result in the dashboard.

Let's register a user, log in, enroll them in multiple courses, and interact with the course content.

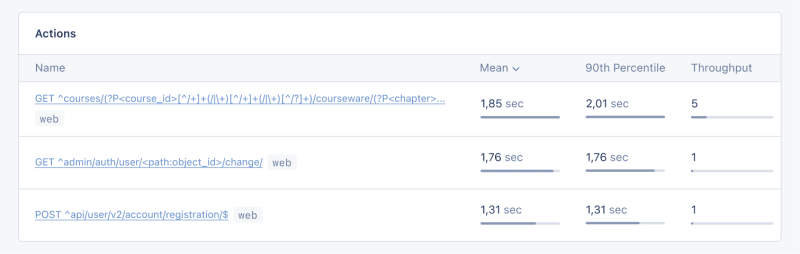

Actions

Going to Actions, let's order the actions based on their mean time and find slow events:

As we can see, for 3 events (out of the 34 events we tested) the response time is higher than 1 second.

Host Metrics

Host Metrics in AppSignal show resource usage:

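Our system isn't under heavy load (the load average is 0.03), but memory usage is high.

We can also add a trigger to get notified when resource usage meets a specific condition. For example, we can set a trigger that notifies us when memory usage exceeds 80%, so we can act before it causes an outage.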
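Triggers are configured in AppSignal itself, so nothing like the following needs to live in your application. Purely to illustrate the kind of rule a trigger expresses, here is a hypothetical check that fires only when memory usage stays above 80% for several consecutive samples, so a single short spike does not raise an alert. The consecutive-samples rule is an assumption for illustration, not a description of how AppSignal's triggers behave.

```python
def should_alert(samples_pct: list[float], threshold_pct: float = 80.0, consecutive: int = 3) -> bool:
    """Return True if the last `consecutive` memory samples all exceed threshold_pct.

    samples_pct holds memory usage percentages, oldest first.
    """
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    recent = samples_pct[-consecutive:]
    return len(recent) == consecutive and all(s > threshold_pct for s in recent)


if __name__ == "__main__":
    print(should_alert([70.0, 85.0, 90.0, 92.0]))  # True: last three samples above 80%
    print(should_alert([85.0, 90.0, 70.0]))        # False: usage dropped again
```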

When the condition is met, you should receive a notification like this:

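Celery Task Monitoring

In Open edX, we use Celery for asynchronous and long-running tasks such as certificate generation, grading, and bulk email.

Depending on the task and the number of users, some of these tasks can run for a long time and cause performance problems on our platform.

For example, if thousands of users are enrolled in a course and we need to rescore them, the task could take a while. Users might complain that their grades aren't showing on the dashboard because the task is still running. Information about Celery tasks, their runtimes, and their resource usage gives us important insights and shows where the application can be improved.

Let's use AppSignal to track Celery tasks in Open edX and view the results in the dashboard. First, make sure the necessary requirements are installed. Next, let's set up the tasks to track Celery performance, as in this commit.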

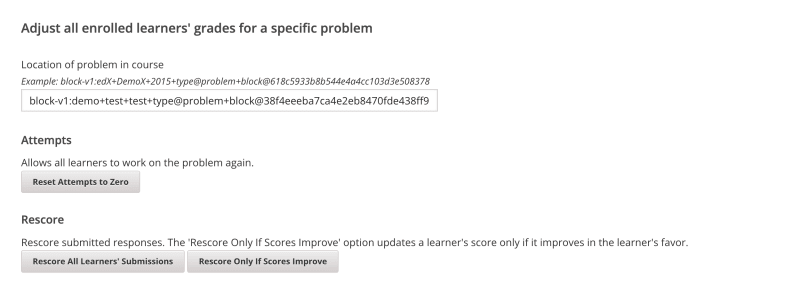

Now, let's run a few tasks from the Open edX dashboard to reset attempts and rescore learners' submissions:

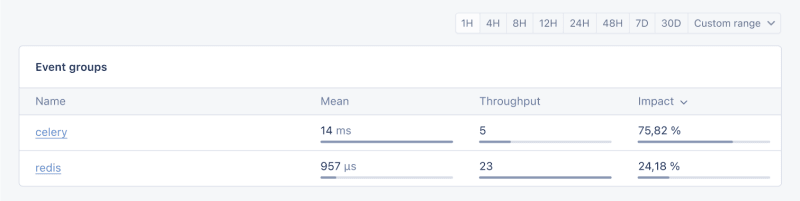

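In AppSignal, we'll go to Performance -> Slow events, and we'll see something like this:

Clicking on Celery shows all the tasks that ran on Open edX:

This is very useful information: it shows whether tasks are running longer than expected, so we can address any potential performance bottlenecks.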
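AppSignal collects these task timings for you once the integration is in place. To show the underlying idea in isolation, here is a stdlib-only sketch that records each run of a task function and flags tasks whose slowest run exceeds an expected duration. The `track_runtime` decorator, the expected durations, and `rescore_submissions` are all hypothetical; in a real Celery app, hooks like this could instead live in Celery's task_prerun and task_postrun signals.

```python
import functools
import time
from collections import defaultdict

# Task name -> observed runtimes in seconds (kept in memory for illustration).
RUNTIMES: defaultdict[str, list[float]] = defaultdict(list)


def track_runtime(func):
    """Decorator that records how long each call to func takes."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            RUNTIMES[func.__qualname__].append(time.perf_counter() - start)
    return wrapper


def slower_than_expected(expected_s: dict[str, float]) -> list[str]:
    """Sorted names of tasks whose slowest recorded run exceeded its expected time."""
    return sorted(
        name
        for name, runs in RUNTIMES.items()
        if name in expected_s and max(runs) > expected_s[name]
    )


@track_runtime
def rescore_submissions(learner_count: int) -> None:
    """Hypothetical stand-in for a long-running rescoring task."""
    time.sleep(0.001 * learner_count)


if __name__ == "__main__":
    rescore_submissions(50)  # sleeps about 50 ms
    print(slower_than_expected({"rescore_submissions": 0.02}))  # ['rescore_submissions']
```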

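And that's it!

Wrapping Up

In this article, we saw how AppSignal gives us insight into the performance of a Django application.

We monitored a simple e-commerce Django application, including metrics such as concurrent users, database queries, and response time.

As a case study, we integrated AppSignal with Open edX, showing how performance monitoring can help improve the user experience, especially for the students who use the platform.

Happy coding!

P.S. If you'd like to read Python posts as soon as they are published, subscribe to our Python Wizardry newsletter and never miss a single post!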
