掌握數據工程的藝術以支援價值數十億美元的技術生態系統

Data reigns supreme as the currency of innovation, and it is a valuable one at that. In the multifaceted world of technology, mastering the art of data engineering has become crucial for supporting billion-dollar tech ecosystems. This sophisticated craft involves creating and maintaining data infrastructures capable of handling vast amounts of information with high reliability and efficiency.

Data reigns supreme as the currency of innovation, and it is a valuable one at that. In the multifaceted world of technology, mastering the art of data engineering has become crucial for supporting billion-dollar tech ecosystems. This sophisticated craft involves creating and maintaining data infrastructures capable of handling vast amounts of information with high reliability and efficiency.

As companies push the boundaries of innovation, the role of data engineers has never been more critical. Specialists design systems that certify seamless data flow, optimize performance, and provide the backbone for applications and services that millions of people use.

The tech ecosystem’s health lies in the capable hands of those who develop it for a living. Its growth— or collapse — all depends on how proficient one is at wielding the art of data engineering.

The Backbone of Modern Technology

Data engineering often plays the role of an unsung hero behind modern technology's seamless functionality. It involves a meticulous process of designing, constructing, and maintaining scalable data systems that can efficiently handle data's massive inflow and outflow.

These systems form the backbone of tech giants, enabling them to provide uninterrupted services to their users. Data engineering makes certain that everything runs smoothly. This encompasses aspects from e-commerce platforms processing millions of transactions per day, social media networks handling real-time updates, or navigation services providing live traffic updates.

Building Resilient Infrastructures

One of the primary challenges in data engineering is building resilient infrastructures that can withstand failures and protect data integrity. High availability environments are essential, as even minor downtimes can lead to significant disruptions and financial losses. Data engineers employ data replication, redundancy, and disaster recovery planning techniques to create robust systems.

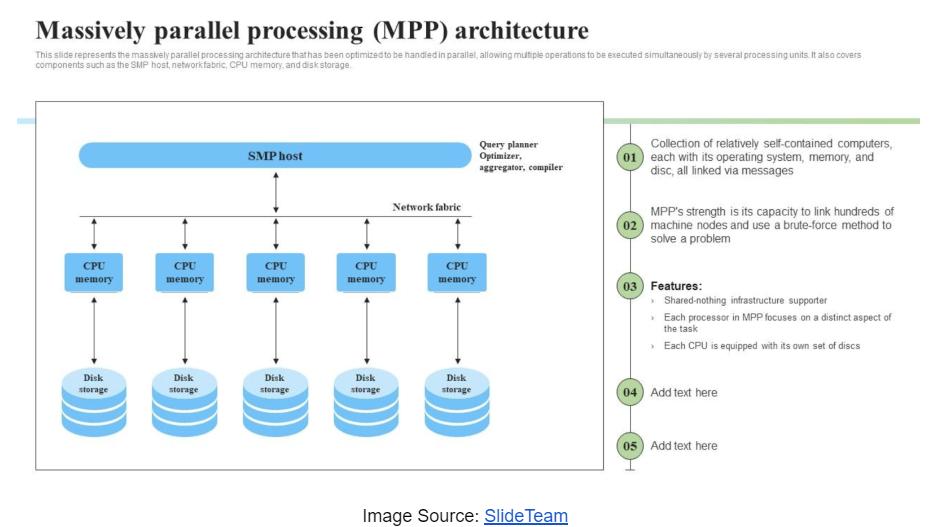

For instance, by implementing Massive Parallel Processing (MPP) architecture databases like IBM Netezza and AWS (Amazon Web Services), Redshift has redefined how companies handle large-scale data operations, providing high-speed processing and reliability.

Leveraging Massive Parallel Processing (MPP) Databases

MPP databases are a group of servers working together as one entity. The first critical component of the MPP database is how data is stored across all nodes in the cluster. A data set is split across many segments and distributed across nodes based on the table's distribution key. While it may be intuitive to split data equally on all nodes to leverage all the resources in response to user queries, there is more to it than just storing for performance — such as data skew and process skew.

Data skew occurs when data is unevenly distributed across the nodes. This means that the node carrying more data has more work than the node having less data for the same user request. The slowest node in the cluster always determines the cumulative response time of the cluster. Process skew also entails unevenly distributed data across the nodes. The difference in this situation can be found in the user's interest in data that is only stored in a few nodes. Consequently, only those specific nodes work in response to the use of query, whereas other nodes are idle (i.e., underutilization of cluster resources).

A delicate balance must be achieved between how data is stored and accessed, preventing data skew and process skew. The balance between data stored and accessed can be achieved by understanding the data access patterns. Data must be shared using the same unique key across tables, which will be used chiefly for joining data between tables. The unique key will ensure even data distribution and that the tables often joined on the same unique key end up storing the data on the same nodes. This arrangement of data will lead to a much faster local data join (co-located join) than the need to move data across nodes to join to create a final dataset.

Another performance enhancer is sorting the data during the loading process. Unlike traditional databases, MPP databases do not have an index. Instead, they eliminate unnecessary data block scans based on how the keys are sorted. Data must be loaded by defining the sort key, and user queries must use this sort key to avoid unnecessary scanning of data blocks.

Driving Innovation With Advanced Technologies

The field of data engineering never remains the same, with new technologies and methodologies emerging daily to address growing data demands. In recent years, adopting hybrid cloud solutions has become a power move.

Companies can achieve greater flexibility, scalability, and cost efficiency by taking advantage of cloud services such as AWS, Azure, and GCP. Data engineers play a crucial role in evaluating these cloud offerings, determining their suitability for specific requirements, and implementing them to fine-tune performance.

Moreover, automation and artificial intelligence (AI) are transforming data engineering, making processes more efficient by reducing human intervention. Data engineers are increasingly developing self-healing systems that detect issues and automatically take corrective actions.

This proactive outlook decreases downtime and boosts the overall reliability of data infrastructures. Additionally, exhaustive telemetry monitors systems in real-time, enabling early detection of potential problems and the generation of swift resolutions.

Navigating the Digital Tomorrows: The Internet of Things and the World of People

As data volumes continue to grow tenfold, the future of data engineering promises even more upgrades and challenges. Emerging technologies such as quantum computing and edge computing are poised to modify the field, offering unprecedented processing power and efficiency. Data engineers must be able to see these trends coming from a mile away.

As the industry moves into the future at record speed, the ingenuity of data engineers will remain a key point of the digital age, powering the applications that define both the Internet of Things and the world of people.

以上是掌握數據工程的藝術以支援價值數十億美元的技術生態系統的詳細內容。更多資訊請關注PHP中文網其他相關文章!

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

Video Face Swap

使用我們完全免費的人工智慧換臉工具,輕鬆在任何影片中換臉!

熱門文章

熱工具

記事本++7.3.1

好用且免費的程式碼編輯器

SublimeText3漢化版

中文版,非常好用

禪工作室 13.0.1

強大的PHP整合開發環境

Dreamweaver CS6

視覺化網頁開發工具

SublimeText3 Mac版

神級程式碼編輯軟體(SublimeText3)