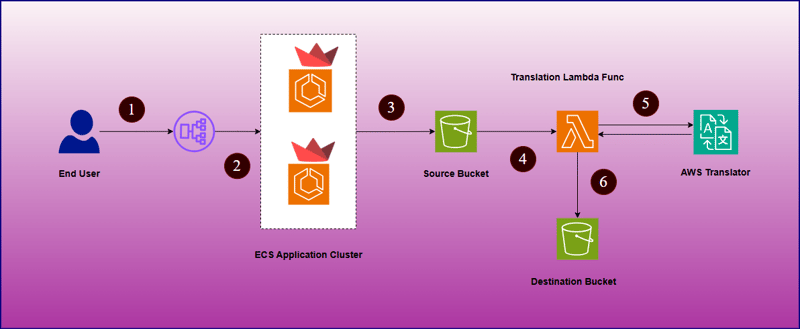

DocuTranslator,一個文件翻譯系統,內建於 AWS 中,由 Streamlit 應用程式框架開發。該應用程式允許最終用戶將文件翻譯成他們想要上傳的首選語言。它提供了根據使用者需求翻譯成多種語言的可行性,這確實幫助使用者以舒適的方式理解內容。

這個專案的目的是提供一個用戶友好、簡單的應用程式介面,以完成用戶期望的簡單翻譯過程。在此系統中,沒有人需要透過進入 AWS Translate 服務來翻譯文檔,最終使用者可以直接存取應用程式端點並滿足要求。

以上架構顯示了以下要點 -

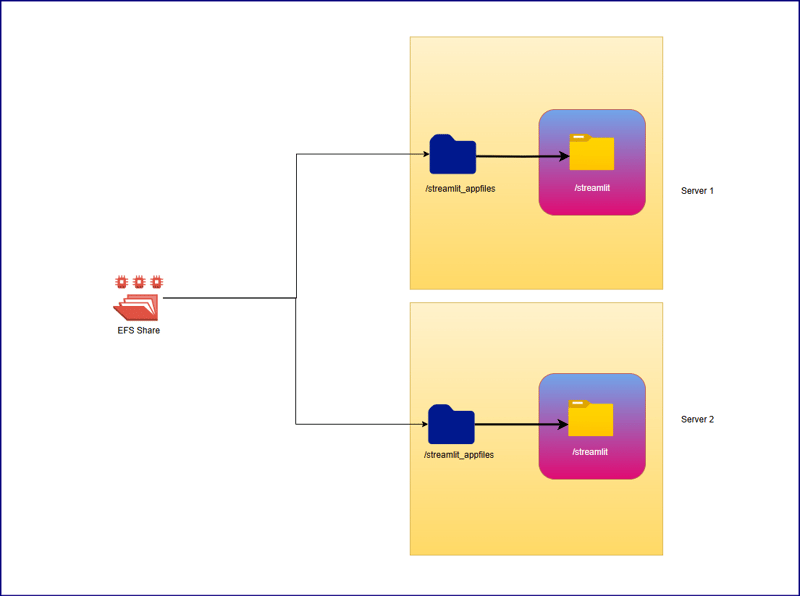

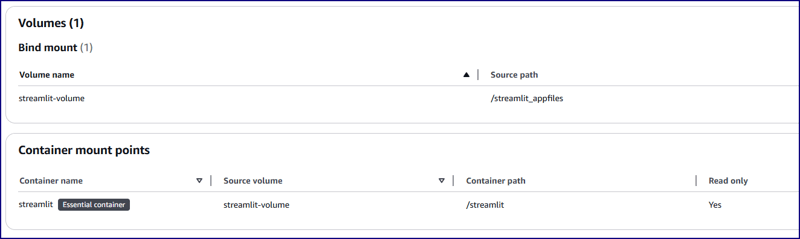

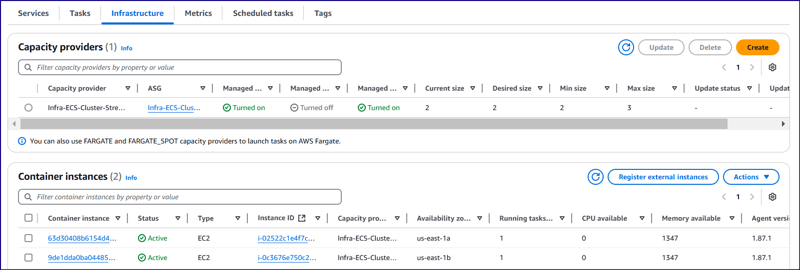

在這裡,我們使用 EFS 共用路徑在兩個底層 EC2 執行個體之間共用相同的應用程式檔案。我們在 EC2 執行個體內建立了一個掛載點 /streamlit_appfiles 並使用 EFS 共用掛載。這種方法將有助於在兩個不同的伺服器之間共享相同的內容。之後,我們的目的是創建一個複製相同的應用程式內容到容器工作目錄 /streamlit。為此,我們使用了綁定掛載,以便對 EC2 層級的應用程式程式碼進行的任何更改也將複製到容器。我們需要限制雙向複製,這意味著如果任何人錯誤地從容器內部更改程式碼,它不應該複製到 EC2 主機級別,因此容器內部工作目錄已建立為唯讀檔案系統。

底層 EC2 配置:

實例類型:t2.medium

網路類型:私有子網路

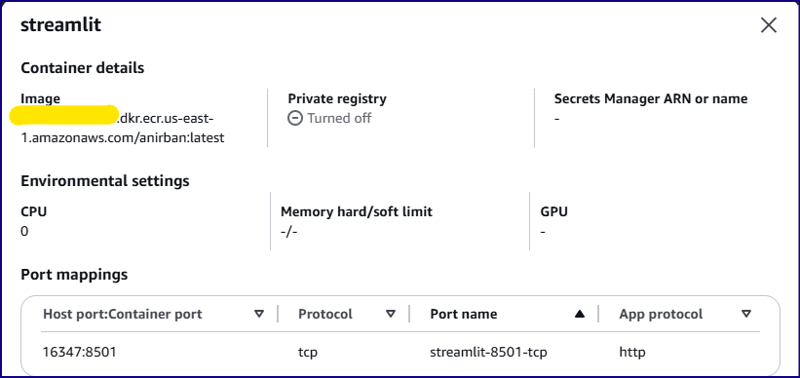

容器配置:

圖:

網路模式:預設

主機連接埠:16347

貨櫃港:8501

任務CPU:2個vCPU(2048個)

任務記憶體:2.5 GB (2560 MiB)

音量配置:

卷名:streamlit-volume

來源路徑:/streamlit_appfiles

容器路徑:/streamlit

只讀檔案系統:是

任務定義參考:

{

"taskDefinitionArn": "arn:aws:ecs:us-east-1:<account-id>:task-definition/Streamlit_TDF-1:5",

"containerDefinitions": [

{

"name": "streamlit",

"image": "<account-id>.dkr.ecr.us-east-1.amazonaws.com/anirban:latest",

"cpu": 0,

"portMappings": [

{

"name": "streamlit-8501-tcp",

"containerPort": 8501,

"hostPort": 16347,

"protocol": "tcp",

"appProtocol": "http"

}

],

"essential": true,

"environment": [],

"environmentFiles": [],

"mountPoints": [

{

"sourceVolume": "streamlit-volume",

"containerPath": "/streamlit",

"readOnly": true

}

],

"volumesFrom": [],

"ulimits": [],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/Streamlit_TDF-1",

"mode": "non-blocking",

"awslogs-create-group": "true",

"max-buffer-size": "25m",

"awslogs-region": "us-east-1",

"awslogs-stream-prefix": "ecs"

},

"secretOptions": []

},

"systemControls": []

}

],

"family": "Streamlit_TDF-1",

"taskRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"executionRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"revision": 5,

"volumes": [

{

"name": "streamlit-volume",

"host": {

"sourcePath": "/streamlit_appfiles"

}

}

],

"status": "ACTIVE",

"requiresAttributes": [

{

"name": "com.amazonaws.ecs.capability.logging-driver.awslogs"

},

{

"name": "ecs.capability.execution-role-awslogs"

},

{

"name": "com.amazonaws.ecs.capability.ecr-auth"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.19"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.28"

},

{

"name": "com.amazonaws.ecs.capability.task-iam-role"

},

{

"name": "ecs.capability.execution-role-ecr-pull"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.18"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.29"

}

],

"placementConstraints": [],

"compatibilities": [

"EC2"

],

"requiresCompatibilities": [

"EC2"

],

"cpu": "2048",

"memory": "2560",

"runtimePlatform": {

"cpuArchitecture": "X86_64",

"operatingSystemFamily": "LINUX"

},

"registeredAt": "2024-11-09T05:59:47.534Z",

"registeredBy": "arn:aws:iam::<account-id>:root",

"tags": []

}

app.py

import streamlit as st

import boto3

import os

import time

from pathlib import Path

s3 = boto3.client('s3', region_name='us-east-1')

tran = boto3.client('translate', region_name='us-east-1')

lam = boto3.client('lambda', region_name='us-east-1')

# Function to list S3 buckets

def listbuckets():

list_bucket = s3.list_buckets()

bucket_name = tuple([it["Name"] for it in list_bucket["Buckets"]])

return bucket_name

# Upload object to S3 bucket

def upload_to_s3bucket(file_path, selected_bucket, file_name):

s3.upload_file(file_path, selected_bucket, file_name)

def list_language():

response = tran.list_languages()

list_of_langs = [i["LanguageName"] for i in response["Languages"]]

return list_of_langs

def wait_for_s3obj(dest_selected_bucket, file_name):

while True:

try:

get_obj = s3.get_object(Bucket=dest_selected_bucket, Key=f'Translated-{file_name}.txt')

obj_exist = 'true' if get_obj['Body'] else 'false'

return obj_exist

except s3.exceptions.ClientError as e:

if e.response['Error']['Code'] == "404":

print(f"File '{file_name}' not found. Checking again in 3 seconds...")

time.sleep(3)

def download(dest_selected_bucket, file_name, file_path):

s3.download_file(dest_selected_bucket,f'Translated-{file_name}.txt', f'{file_path}/download/Translated-{file_name}.txt')

with open(f"{file_path}/download/Translated-{file_name}.txt", "r") as file:

st.download_button(

label="Download",

data=file,

file_name=f"{file_name}.txt"

)

def streamlit_application():

# Give a header

st.header("Document Translator", divider=True)

# Widgets to upload a file

uploaded_files = st.file_uploader("Choose a PDF file", accept_multiple_files=True, type="pdf")

# # upload a file

file_name = uploaded_files[0].name.replace(' ', '_') if uploaded_files else None

# Folder path

file_path = '/tmp'

# Select the bucket from drop down

selected_bucket = st.selectbox("Choose the S3 Bucket to upload file :", listbuckets())

dest_selected_bucket = st.selectbox("Choose the S3 Bucket to download file :", listbuckets())

selected_language = st.selectbox("Choose the Language :", list_language())

# Create a button

click = st.button("Upload", type="primary")

if click == True:

if file_name:

with open(f'{file_path}/{file_name}', mode='wb') as w:

w.write(uploaded_files[0].getvalue())

# Set the selected language to the environment variable of lambda function

lambda_env1 = lam.update_function_configuration(FunctionName='TriggerFunctionFromS3', Environment={'Variables': {'UserInputLanguage': selected_language, 'DestinationBucket': dest_selected_bucket, 'TranslatedFileName': file_name}})

# Upload the file to S3 bucket:

upload_to_s3bucket(f'{file_path}/{file_name}', selected_bucket, file_name)

if s3.get_object(Bucket=selected_bucket, Key=file_name):

st.success("File uploaded successfully", icon="✅")

output = wait_for_s3obj(dest_selected_bucket, file_name)

if output:

download(dest_selected_bucket, file_name, file_path)

else:

st.error("File upload failed", icon="?")

streamlit_application()

about.py

import streamlit as st

## Write the description of application

st.header("About")

about = '''

Welcome to the File Uploader Application!

This application is designed to make uploading PDF documents simple and efficient. With just a few clicks, users can upload their documents securely to an Amazon S3 bucket for storage. Here’s a quick overview

of what this app does:

**Key Features:**

- **Easy Upload:** Users can quickly upload PDF documents by selecting the file and clicking the 'Upload' button.

- **Seamless Integration with AWS S3:** Once the document is uploaded, it is stored securely in a designated S3 bucket, ensuring reliable and scalable cloud storage.

- **User-Friendly Interface:** Built using Streamlit, the interface is clean, intuitive, and accessible to all users, making the uploading process straightforward.

**How it Works:**

1. **Select a PDF Document:** Users can browse and select any PDF document from their local system.

2. **Upload the Document:** Clicking the ‘Upload’ button triggers the process of securely uploading the selected document to an AWS S3 bucket.

3. **Success Notification:** After a successful upload, users will receive a confirmation message that their document has been stored in the cloud.

This application offers a streamlined way to store documents on the cloud, reducing the hassle of manual file management. Whether you're an individual or a business, this tool helps you organize and store your

files with ease and security.

You can further customize this page by adding technical details, usage guidelines, or security measures as per your application's specifications.'''

st.markdown(about)

navigation.py

import streamlit as st

pg = st.navigation([

st.Page("app.py", title="DocuTranslator", icon="?"),

st.Page("about.py", title="About", icon="?")

], position="sidebar")

pg.run()

Dockerfile:

FROM python:3.9-slim WORKDIR /streamlit COPY requirements.txt /streamlit/requirements.txt RUN pip install --no-cache-dir -r requirements.txt RUN mkdir /tmp/download COPY . /streamlit EXPOSE 8501 CMD ["streamlit", "run", "navigation.py", "--server.port=8501", "--server.headless=true"]

Docker 檔案將透過打包所有上述應用程式設定檔來建立映像,然後將其推送到 ECR 儲存庫。 Docker Hub 也可以用來儲存映像。

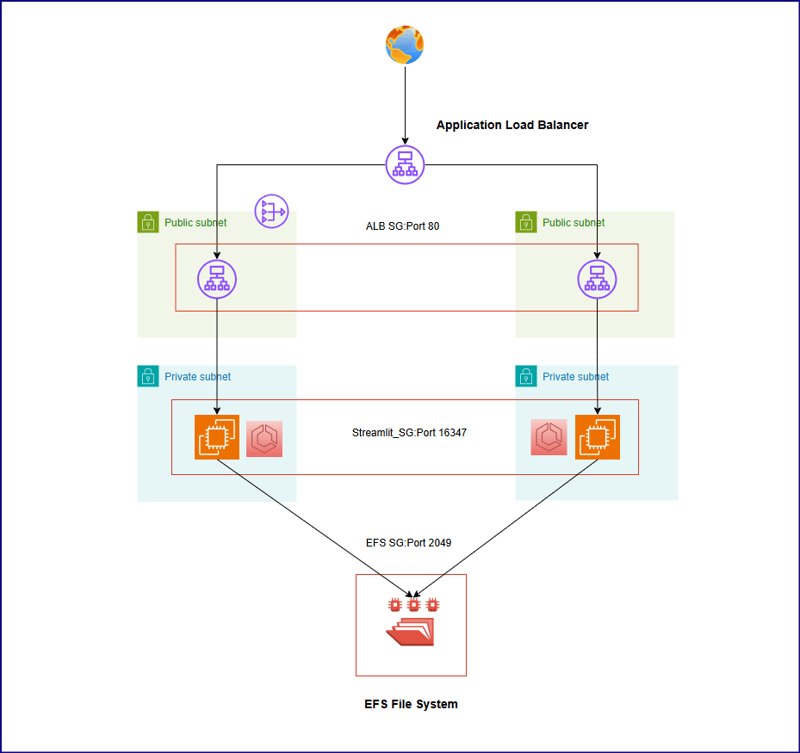

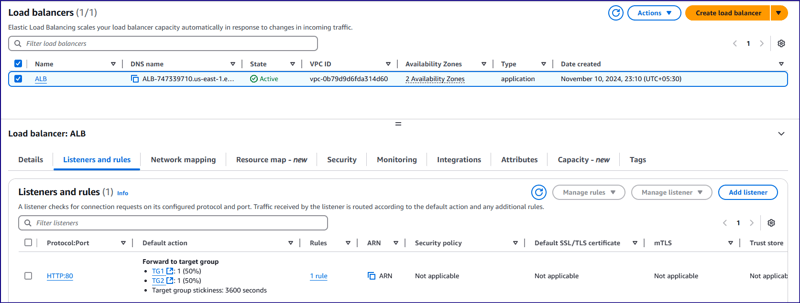

在該架構中,應用程式執行個體應該在私有子網路中創建,並且負載平衡器應該創建以減少私有 EC2 執行個體的傳入流量負載。

由於有兩個底層 EC2 主機可用於託管容器,因此在兩個 EC2 主機之間配置負載平衡以分配傳入流量。建立兩個不同的目標群組,在每個目標群組中放置兩個 EC2 實例,權重為 50%。

負載平衡器接受連接埠 80 的傳入流量,然後傳遞至連接埠 16347 處的後端 EC2 實例,並傳遞給對應的 ECS 容器。

有一個lambda 函數,配置為將來源儲存桶作為輸入,從那裡下載pdf 檔案並提取內容,然後將內容從當前語言翻譯為使用者提供的目標語言,並建立一個文字檔案以上傳到目標S3桶。

{

"taskDefinitionArn": "arn:aws:ecs:us-east-1:<account-id>:task-definition/Streamlit_TDF-1:5",

"containerDefinitions": [

{

"name": "streamlit",

"image": "<account-id>.dkr.ecr.us-east-1.amazonaws.com/anirban:latest",

"cpu": 0,

"portMappings": [

{

"name": "streamlit-8501-tcp",

"containerPort": 8501,

"hostPort": 16347,

"protocol": "tcp",

"appProtocol": "http"

}

],

"essential": true,

"environment": [],

"environmentFiles": [],

"mountPoints": [

{

"sourceVolume": "streamlit-volume",

"containerPath": "/streamlit",

"readOnly": true

}

],

"volumesFrom": [],

"ulimits": [],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/Streamlit_TDF-1",

"mode": "non-blocking",

"awslogs-create-group": "true",

"max-buffer-size": "25m",

"awslogs-region": "us-east-1",

"awslogs-stream-prefix": "ecs"

},

"secretOptions": []

},

"systemControls": []

}

],

"family": "Streamlit_TDF-1",

"taskRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"executionRoleArn": "arn:aws:iam::<account-id>:role/ecsTaskExecutionRole",

"revision": 5,

"volumes": [

{

"name": "streamlit-volume",

"host": {

"sourcePath": "/streamlit_appfiles"

}

}

],

"status": "ACTIVE",

"requiresAttributes": [

{

"name": "com.amazonaws.ecs.capability.logging-driver.awslogs"

},

{

"name": "ecs.capability.execution-role-awslogs"

},

{

"name": "com.amazonaws.ecs.capability.ecr-auth"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.19"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.28"

},

{

"name": "com.amazonaws.ecs.capability.task-iam-role"

},

{

"name": "ecs.capability.execution-role-ecr-pull"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.18"

},

{

"name": "com.amazonaws.ecs.capability.docker-remote-api.1.29"

}

],

"placementConstraints": [],

"compatibilities": [

"EC2"

],

"requiresCompatibilities": [

"EC2"

],

"cpu": "2048",

"memory": "2560",

"runtimePlatform": {

"cpuArchitecture": "X86_64",

"operatingSystemFamily": "LINUX"

},

"registeredAt": "2024-11-09T05:59:47.534Z",

"registeredBy": "arn:aws:iam::<account-id>:root",

"tags": []

}

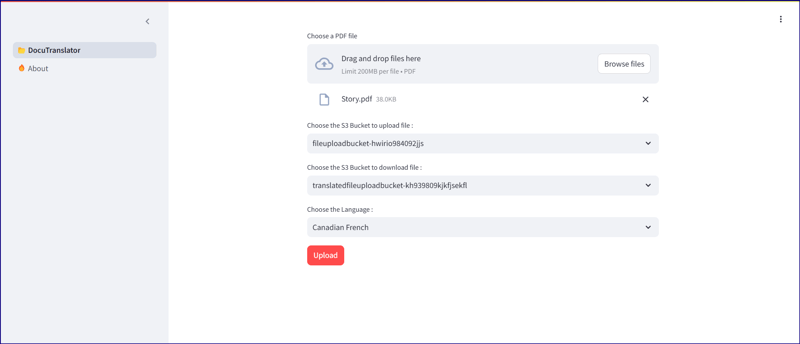

開啟應用程式負載平衡器 URL「ALB-747339710.us-east-1.elb.amazonaws.com」以開啟 Web 應用程式。瀏覽任何pdf 文件,保持來源"fileuploadbucket-hwirio984092jjs" 和目標儲存桶"translatedfileuploadbucket-kh939809kjkfjsekfl" loadbucket-kh939809kjkfjsekfl"

大程式碼就是上面提到的。選擇您想要翻譯文件的語言,然後按一下上傳。點擊後,應用程式將開始輪詢目標 S3 儲存桶以查明翻譯檔案是否已上傳。如果找到確切的文件,則會顯示一個新選項“下載”,用於從目標 S3 儲存桶下載文件。申請連結:http://alb-747339710.us-east-1.elb.amazonaws.com/

實際內容:

import streamlit as st

import boto3

import os

import time

from pathlib import Path

s3 = boto3.client('s3', region_name='us-east-1')

tran = boto3.client('translate', region_name='us-east-1')

lam = boto3.client('lambda', region_name='us-east-1')

# Function to list S3 buckets

def listbuckets():

list_bucket = s3.list_buckets()

bucket_name = tuple([it["Name"] for it in list_bucket["Buckets"]])

return bucket_name

# Upload object to S3 bucket

def upload_to_s3bucket(file_path, selected_bucket, file_name):

s3.upload_file(file_path, selected_bucket, file_name)

def list_language():

response = tran.list_languages()

list_of_langs = [i["LanguageName"] for i in response["Languages"]]

return list_of_langs

def wait_for_s3obj(dest_selected_bucket, file_name):

while True:

try:

get_obj = s3.get_object(Bucket=dest_selected_bucket, Key=f'Translated-{file_name}.txt')

obj_exist = 'true' if get_obj['Body'] else 'false'

return obj_exist

except s3.exceptions.ClientError as e:

if e.response['Error']['Code'] == "404":

print(f"File '{file_name}' not found. Checking again in 3 seconds...")

time.sleep(3)

def download(dest_selected_bucket, file_name, file_path):

s3.download_file(dest_selected_bucket,f'Translated-{file_name}.txt', f'{file_path}/download/Translated-{file_name}.txt')

with open(f"{file_path}/download/Translated-{file_name}.txt", "r") as file:

st.download_button(

label="Download",

data=file,

file_name=f"{file_name}.txt"

)

def streamlit_application():

# Give a header

st.header("Document Translator", divider=True)

# Widgets to upload a file

uploaded_files = st.file_uploader("Choose a PDF file", accept_multiple_files=True, type="pdf")

# # upload a file

file_name = uploaded_files[0].name.replace(' ', '_') if uploaded_files else None

# Folder path

file_path = '/tmp'

# Select the bucket from drop down

selected_bucket = st.selectbox("Choose the S3 Bucket to upload file :", listbuckets())

dest_selected_bucket = st.selectbox("Choose the S3 Bucket to download file :", listbuckets())

selected_language = st.selectbox("Choose the Language :", list_language())

# Create a button

click = st.button("Upload", type="primary")

if click == True:

if file_name:

with open(f'{file_path}/{file_name}', mode='wb') as w:

w.write(uploaded_files[0].getvalue())

# Set the selected language to the environment variable of lambda function

lambda_env1 = lam.update_function_configuration(FunctionName='TriggerFunctionFromS3', Environment={'Variables': {'UserInputLanguage': selected_language, 'DestinationBucket': dest_selected_bucket, 'TranslatedFileName': file_name}})

# Upload the file to S3 bucket:

upload_to_s3bucket(f'{file_path}/{file_name}', selected_bucket, file_name)

if s3.get_object(Bucket=selected_bucket, Key=file_name):

st.success("File uploaded successfully", icon="✅")

output = wait_for_s3obj(dest_selected_bucket, file_name)

if output:

download(dest_selected_bucket, file_name, file_path)

else:

st.error("File upload failed", icon="?")

streamlit_application()

翻譯內容(加拿大法文)

import streamlit as st

## Write the description of application

st.header("About")

about = '''

Welcome to the File Uploader Application!

This application is designed to make uploading PDF documents simple and efficient. With just a few clicks, users can upload their documents securely to an Amazon S3 bucket for storage. Here’s a quick overview

of what this app does:

**Key Features:**

- **Easy Upload:** Users can quickly upload PDF documents by selecting the file and clicking the 'Upload' button.

- **Seamless Integration with AWS S3:** Once the document is uploaded, it is stored securely in a designated S3 bucket, ensuring reliable and scalable cloud storage.

- **User-Friendly Interface:** Built using Streamlit, the interface is clean, intuitive, and accessible to all users, making the uploading process straightforward.

**How it Works:**

1. **Select a PDF Document:** Users can browse and select any PDF document from their local system.

2. **Upload the Document:** Clicking the ‘Upload’ button triggers the process of securely uploading the selected document to an AWS S3 bucket.

3. **Success Notification:** After a successful upload, users will receive a confirmation message that their document has been stored in the cloud.

This application offers a streamlined way to store documents on the cloud, reducing the hassle of manual file management. Whether you're an individual or a business, this tool helps you organize and store your

files with ease and security.

You can further customize this page by adding technical details, usage guidelines, or security measures as per your application's specifications.'''

st.markdown(about)

本文向我們展示了文件翻譯過程如何像我們想像的那樣簡單,最終用戶必須單擊一些選項來選擇所需的信息,並在幾秒鐘內獲得所需的輸出,而無需考慮配置。目前,我們已經包含了翻譯 pdf 文件的單一功能,但稍後我們將對此進行更多研究,以便在單一應用程式中具有多種功能,並具有一些有趣的功能。

以上是使用 Streamlit 和 AWS Translator 的文件翻譯服務的詳細內容。更多資訊請關注PHP中文網其他相關文章!