Diffusion and Super-Resolution Models Join Forces: The Technology Behind Google's Image Generator Imagen

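In recent years, multimodal learning has attracted growing attention, particularly in two directions: text-to-image synthesis and image-text contrastive learning. Some AI models have drawn wide public attention for their applications in creative image generation and editing, such as OpenAI's text-to-image models DALL-E and DALL-E 2, and NVIDIA's GauGAN and GauGAN2.

Not to be outdone, Google released its own text-to-image model, Imagen, at the end of May. It appears to push the boundaries of caption-conditional image generation further.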

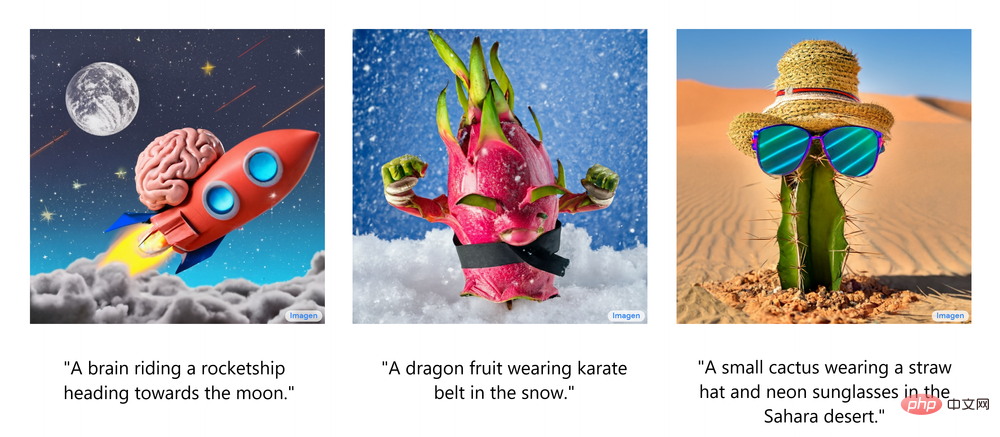

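Given only a description of a scene, Imagen can generate high-quality, high-resolution images, regardless of whether the scene makes sense in the real world. The figure below shows several examples of images generated by Imagen from text, with the corresponding caption displayed beneath each image.

These impressive generated images make one wonder: how does Imagen actually work?

Recently, developer educator Ryan O'Connor published a long article on the AssemblyAI blog, "How Imagen Actually Works", which explains in detail how Imagen works. It gives an overview of the model and analyzes its high-level components and how they relate to one another.

Overview of How Imagen Works

In this part, the author presents Imagen's overall architecture and gives a high-level explanation of how it works, then examines each of Imagen's components more thoroughly in turn. The animation below shows Imagen's workflow.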

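First, the caption is fed into a text encoder. This encoder converts the text caption into a numerical representation that encapsulates the semantic information in the text. The text encoder in Imagen is a Transformer encoder, which uses self-attention to ensure that the text encoding captures how the words in the caption relate to one another.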
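To make the idea of self-attention concrete, here is a minimal sketch of a single attention head over a three-word caption. It is not Imagen's actual encoder: the embeddings are made-up numbers, and the learned query, key, and value projections of a real Transformer are replaced by the identity for brevity. The function names are assumptions chosen for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head self-attention with identity Q/K/V projections (a simplification).

    tokens: list of equal-length vectors, one per word.
    Returns (outputs, weights); weights[i][j] is how much word i attends to word j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Scaled dot product between this word and every word in the caption.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        row = softmax(scores)
        weights.append(row)
        # Each output vector is a weighted mix of all word vectors.
        outputs.append([sum(w * tok[d] for w, tok in zip(row, tokens))
                        for d in range(dim)])
    return outputs, weights

# Hypothetical 4-dimensional embeddings for a three-word caption.
caption = ["blue", "dog", "hat"]
embeddings = [[1.0, 0.0, 1.0, 0.0],
              [0.0, 2.0, 0.0, 1.0],
              [1.0, 1.0, 0.0, 0.0]]
contextual, attn = self_attention(embeddings)
```

Each row of `attn` sums to 1, so every output vector in `contextual` is a blend of the whole caption rather than a representation of one word in isolation.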

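If Imagen attended only to individual words and not to the relationships between them, it could still produce high-quality images that capture each element of the caption, but those images would not depict the elements in a way that properly reflects the caption's meaning. As the example in the figure below shows, ignoring the relationships between words leads to a very different result.

Although the text encoder produces a useful representation of Imagen's caption input, a method is still needed to generate an image from this representation, that is, an image generator. For this, Imagen uses a diffusion model, a type of generative model that has become popular in recent years thanks to its state-of-the-art (SOTA) performance on a number of tasks.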

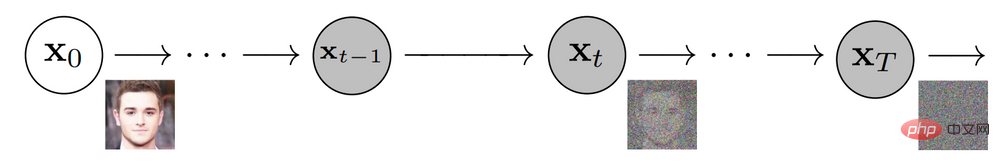

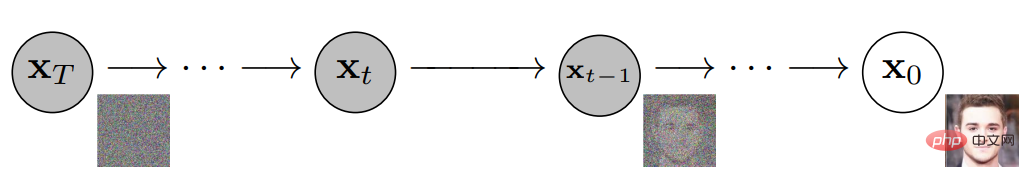

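Diffusion models are trained by corrupting the training data with added noise and then learning to recover the data by reversing this noising process. Given an input image, a diffusion model iteratively corrupts it with Gaussian noise over a series of timesteps, eventually leaving only Gaussian noise, or "TV static". The figure below shows the iterative noising process of a diffusion model: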
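The noising process can be sketched in a few lines. The sketch below assumes the discrete-time DDPM formulation with a linear noise schedule, in which a noisy sample at step t can be drawn directly as sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps. Imagen's own schedule and parameterization may differ; the function names, the schedule values, and the four-pixel toy "image" are illustrative assumptions.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for all s <= t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_image(pixels, t, alpha_bars, rng):
    """Sample x_t from x_0 in one shot; returns (noisy pixels, noise used)."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    return [signal * p + sigma * n for p, n in zip(pixels, noise)], noise

rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

image = [0.5, -0.25, 1.0, -1.0]  # toy "image": four pixel values in [-1, 1]
slightly_noisy, _ = noise_image(image, 10, alpha_bars, rng)
almost_static, _ = noise_image(image, 999, alpha_bars, rng)
```

Early in the schedule `alpha_bars[t]` is close to 1, so the image is barely disturbed. By the last step it is close to 0, so almost nothing of the original image survives and the sample is essentially pure Gaussian noise.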

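The diffusion model then works backward, learning how to isolate and remove the noise at each timestep and thereby undo the corruption that just took place. Once training is complete, the model can be split in two, so that one can start from randomly sampled Gaussian noise and use the diffusion model to gradually denoise it into an image, as shown below:

In summary, a trained diffusion model starts from Gaussian noise and iteratively generates an image similar to the training images. Clearly, there is no control over the actual output: feeding Gaussian noise into the model simply yields a random image that looks like it belongs in the training dataset.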
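The reverse direction can be sketched as a sampling loop. The code below assumes DDPM-style ancestral sampling with a linear schedule, which is not necessarily the sampler Imagen uses. Because no trained network is available here, the noise predictor is a hypothetical "oracle" that already knows the single image in a one-image training set; in a real system this function would be a trained U-Net.

```python
import math
import random

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule plus cumulative products of alpha_t = 1 - beta_t."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars

def oracle_noise_predictor(x_t, t, alpha_bars, target):
    """Stand-in for a trained network: the exact noise if `target` is the only training image."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    return [(x - signal * x0) / sigma for x, x0 in zip(x_t, target)]

def sample(target, num_steps=1000, seed=0):
    """Start from Gaussian noise and denoise step by step."""
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise
    for t in reversed(range(num_steps)):
        eps = oracle_noise_predictor(x, t, alpha_bars, target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Mean of the denoised sample for step t - 1.
        mean = [(xi - coef * e) / math.sqrt(1.0 - betas[t])
                for xi, e in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # re-inject a little noise
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # final step is deterministic
    return x

target_image = [0.8, -0.2, 0.5, -0.9]  # toy four-pixel "training image"
generated = sample(target_image)
```

With this oracle the loop recovers the lone training image, which illustrates the point of the text: an unconditional sampler can only reproduce what its training data looks like, with no say over which image comes out.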

However, the goal is to create images that encapsulate the semantic information of the caption given to Imagen, so a way to incorporate the caption into the diffusion process is needed. How can this be done?

As mentioned above, the text encoder produces a representative caption encoding, which is actually a sequence of vectors. To inject this encoded information into the diffusion model, these vectors are pooled together and the diffusion model is conditioned on the result. By being conditioned on this vector, the diffusion model learns how to adapt its denoising process to produce images that match the caption well. The process is visualized below.
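One simple way to picture this conditioning step is sketched below: the sequence of caption vectors is mean-pooled into a single vector, which is then added to an embedding of the current timestep to form the signal that the denoiser receives. Mean pooling, the sinusoidal timestep embedding, and all function names are assumptions for illustration. The article's later list of key points notes that cross-attention over the text is also crucial, and this sketch does not cover it.

```python
import math

def mean_pool(token_vectors):
    """Average a sequence of vectors into one vector of the same width."""
    count = len(token_vectors)
    dim = len(token_vectors[0])
    return [sum(vec[d] for vec in token_vectors) / count for d in range(dim)]

def timestep_embedding(t, dim):
    """Sinusoidal embedding of the diffusion timestep (dim must be even)."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]

def conditioning_vector(token_vectors, t):
    """Pooled caption vector plus timestep embedding, passed to the denoiser."""
    pooled = mean_pool(token_vectors)
    time_emb = timestep_embedding(t, len(pooled))
    return [p + e for p, e in zip(pooled, time_emb)]

# Hypothetical caption encoding: two tokens, four dimensions each.
caption_encoding = [[1.0, 2.0, 3.0, 4.0],
                    [3.0, 4.0, 5.0, 6.0]]
cond = conditioning_vector(caption_encoding, t=500)
```

The denoiser would receive `cond` alongside the noisy image at every step, so the same network can denoise toward different images depending on the caption.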

Since the image generator, or base model, outputs a small 64x64 image, super-resolution models are used to intelligently upsample this image to the final 1024x1024 version.

For the super-resolution model, Imagen again uses a diffusion model. The overall process is basically the same as for the base model, except that instead of being conditioned solely on the caption encoding, the model is also conditioned on the smaller image being upsampled. The entire process is visualized as follows:

The output of this super-resolution model is not actually the final output, but a medium-sized image. To upscale this image to the final 1024x1024 resolution, another super-resolution model is used. The two super-resolution architectures are roughly the same, so they will not be described again. The output of the second super-resolution model is the final output of Imagen.
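The cascade described above can be summarized as a three-stage pipeline. In the sketch below every model is a placeholder: the base model returns a blank image and each "super-resolution model" is plain nearest-neighbor upsampling, so only the data flow and the image sizes are meaningful. The 256x256 intermediate size is the one reported for Imagen; all function names are hypothetical.

```python
def base_model(caption_encoding, size=64):
    """Placeholder for the base diffusion model: returns a blank size x size image."""
    return [[0.0] * size for _ in range(size)]

def super_resolve(image, caption_encoding, factor):
    """Placeholder for a super-resolution diffusion model.

    A real model would denoise a larger image while conditioned on both the
    caption encoding and the low-resolution image; here each pixel is simply
    repeated factor x factor times.
    """
    return [[pixel for pixel in row for _ in range(factor)]
            for row in image for _ in range(factor)]

def generate(caption_encoding):
    """Run the cascade: 64x64 -> 256x256 -> 1024x1024."""
    small = base_model(caption_encoding)
    medium = super_resolve(small, caption_encoding, factor=4)
    large = super_resolve(medium, caption_encoding, factor=4)
    return small, medium, large

small, medium, large = generate(caption_encoding=[[0.0, 0.0]])
```

Note that the caption encoding is passed to every stage, not only to the base model, which mirrors the description of the super-resolution models being conditioned on both the caption and the smaller image.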

Why is Imagen better than DALL-E 2?

It is difficult to say exactly why Imagen is better than DALL-E 2. However, a significant portion of the performance gap appears to stem from differences in how the two models encode captions (prompts). DALL-E 2 uses a contrastive objective to determine how closely text encodings relate to images (essentially CLIP). The text and image encoders adjust their parameters so that the cosine similarity of matching caption-image pairs is maximized, while the cosine similarity of mismatched caption-image pairs is minimized.
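A contrastive objective of this kind can be written down compactly. The sketch below computes the cosine similarity between every caption and every image in a small batch and applies a symmetric cross-entropy loss that rewards high similarity on the diagonal (matching pairs) and low similarity elsewhere. It follows the general CLIP recipe, but the embeddings are toy values and the fixed temperature of 0.07 is an assumption, not a detail taken from the article.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_similarity_matrix(text_embs, image_embs):
    """sims[i][j] = cosine similarity between caption i and image j."""
    texts = [normalize(v) for v in text_embs]
    images = [normalize(v) for v in image_embs]
    return [[sum(a * b for a, b in zip(t, i)) for i in images] for t in texts]

def cross_entropy(logits, target_index):
    """Negative log-probability of the target under softmax(logits)."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(value - peak) for value in logits))
    return log_sum - logits[target_index]

def contrastive_loss(text_embs, image_embs, temperature=0.07):
    """Symmetric loss: caption i should match image i, and vice versa."""
    sims = cosine_similarity_matrix(text_embs, image_embs)
    n = len(sims)
    logits = [[s / temperature for s in row] for row in sims]
    text_to_image = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    image_to_text = sum(cross_entropy([logits[j][i] for j in range(n)], i)
                        for i in range(n)) / n
    return (text_to_image + image_to_text) / 2

# Toy batch: caption i is meant to describe image i.
text_embs = [[1.0, 0.0], [0.0, 1.0]]
image_embs = [[2.0, 0.1], [0.1, 3.0]]
matched = contrastive_loss(text_embs, image_embs)
mismatched = contrastive_loss(text_embs, list(reversed(image_embs)))
```

Here `matched` comes out far smaller than `mismatched`, which is the behavior training pushes the two encoders toward.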

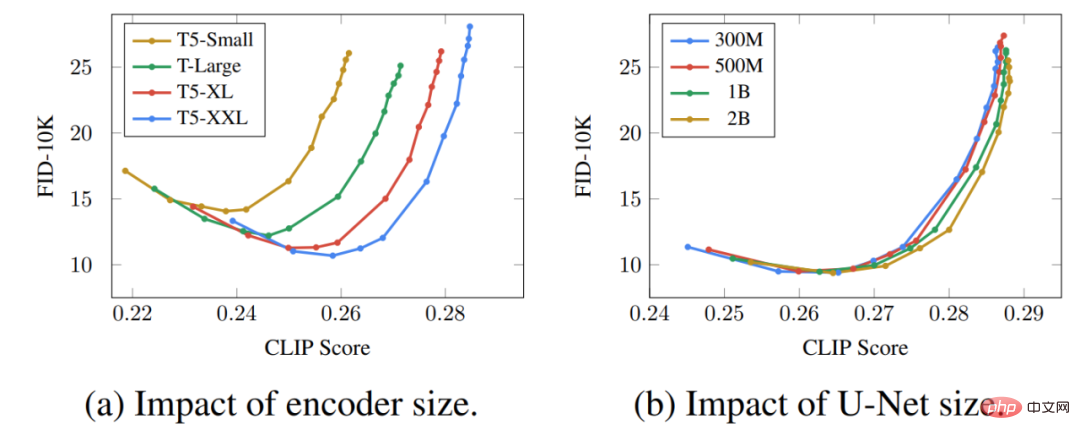

A significant part of the performance gap appears to stem from the fact that Imagen's text encoder is much larger, and trained on more data, than DALL-E 2's text encoder. As evidence for this hypothesis, we can examine how Imagen's performance changes as the text encoder is scaled up. Here is a Pareto curve for Imagen's performance:

Scaling up the text encoder has a surprisingly large effect, while scaling up the U-Net has a surprisingly small one. This result suggests that relatively simple diffusion models can produce high-quality results as long as they are conditioned on a strong encoding.

Given that the T5 text encoder is much larger than the CLIP text encoder, and that natural language training data is necessarily richer than image-caption pairs, much of the performance gap is likely attributable to this difference.

In addition, the author lists several key takeaways from Imagen, including the following:

- Scaling the text encoder is very effective;

- Scaling the text encoder is more important than scaling the U-Net size;

- Dynamic thresholding is crucial;

- Noise conditioning augmentation is crucial in the super-resolution models;

- Using cross-attention for text conditioning is crucial;

- An efficient U-Net is crucial.

These insights provide valuable directions for researchers working on diffusion models, and they are useful beyond the text-to-image subfield.
