AI視訊生成框架測試競爭:Pika、Gen-2、ModelScope、SEINE,誰能勝出?

AI 影片生成,是最近最熱門的領域之一。各大學實驗室、網路巨頭 AI Lab、新創公司紛紛加入了 AI 影片生成的賽道。 Pika、Gen-2、Show-1、VideoCrafter、ModelScope、SEINE、LaVie、VideoLDM 等影片產生模型的發布,更是讓人眼睛一亮。 v⁽ⁱ⁾

大家一定對以下幾個問題感到好奇:

- ##到底哪個影片生成模型最牛?

- 每個模型有什麼專長?

- AI 影片產生領域目前還有哪些值得關注的問題待解決?

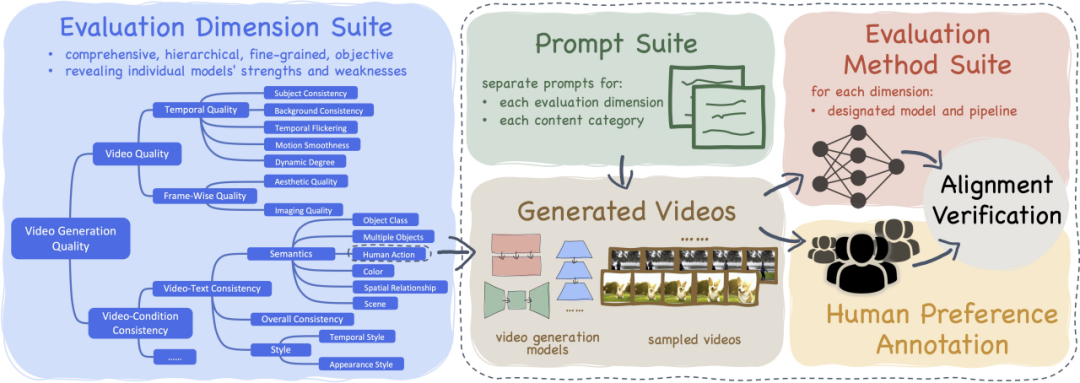

為此,我們推出了VBench,一個全面的「視訊生成模型的評測框架」,旨在向用戶提供關於各種視訊模型的優劣和特點。透過VBench,使用者可以了解不同視訊模型的強項和優勢。

- #論文:https://arxiv.org/abs /2311.17982

- #程式碼:https://github.com/Vchitect/VBench

- 網頁:https://vchitect.github.io /VBench-project/

- 論文標題:VBench: Comprehensive Benchmark Suite for Video Generative Models

#VBench不僅能全面、細緻地評估影片生成效果,也能提供符合人們感官體驗的評估,節省時間和精力。

- VBench 包含16 個分層和解耦的評測維度

- VBench 開源了用於文生視訊產生評測的Prompt List 系統

- VBench 每個維度的評測方案與人類的觀感與評估對齊

- VBench 提供了多視角的洞察,助力未來對於AI 視訊生成的探索

AI 影片產生模型- 評測結果

已開源的AI視訊生成模型

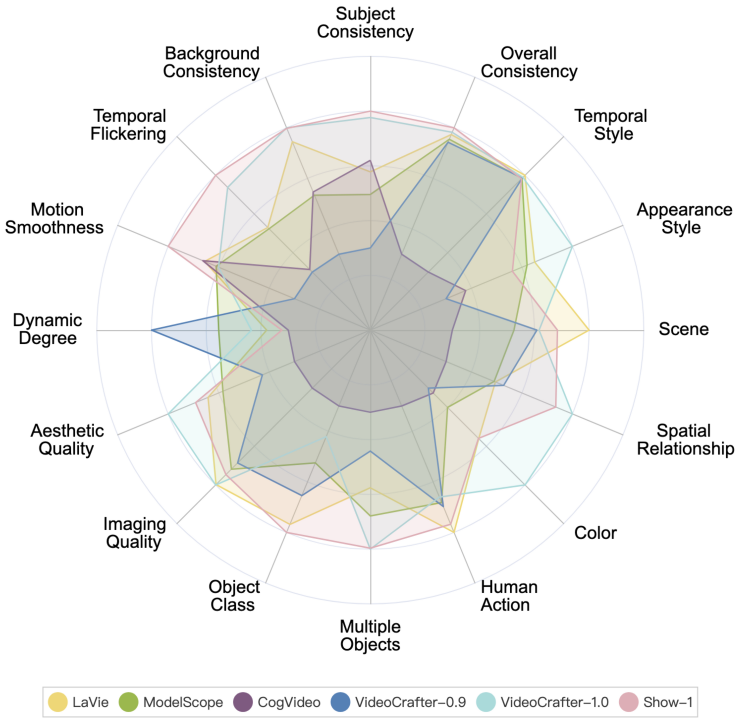

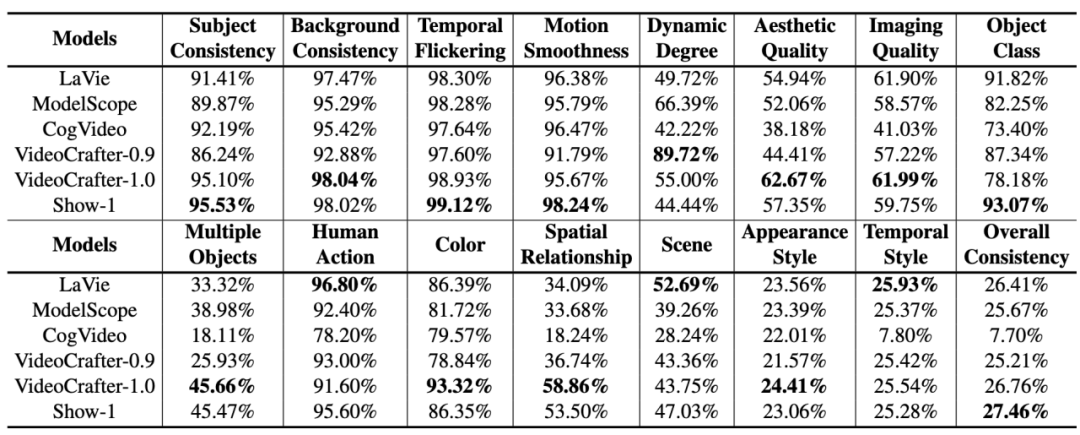

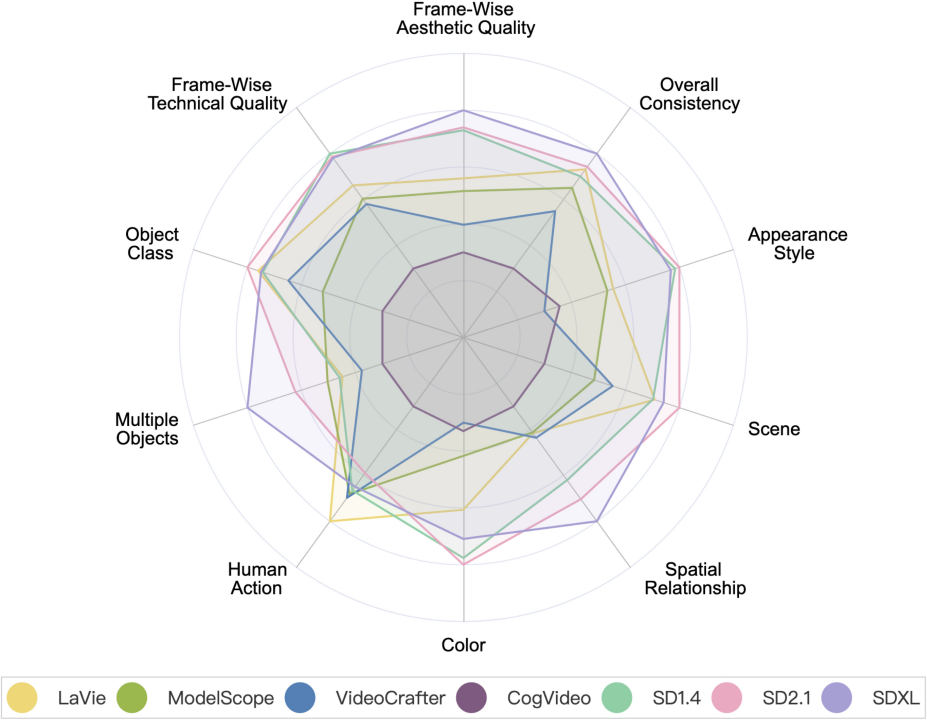

各個開源的AI 視訊產生模型在 VBench 上的表現如下。

在以上 6 個模型中,可以看到 VideoCrafter-1.0 和 Show-1 在大多數維度都有相對優勢。

新創公司的影片產生模型

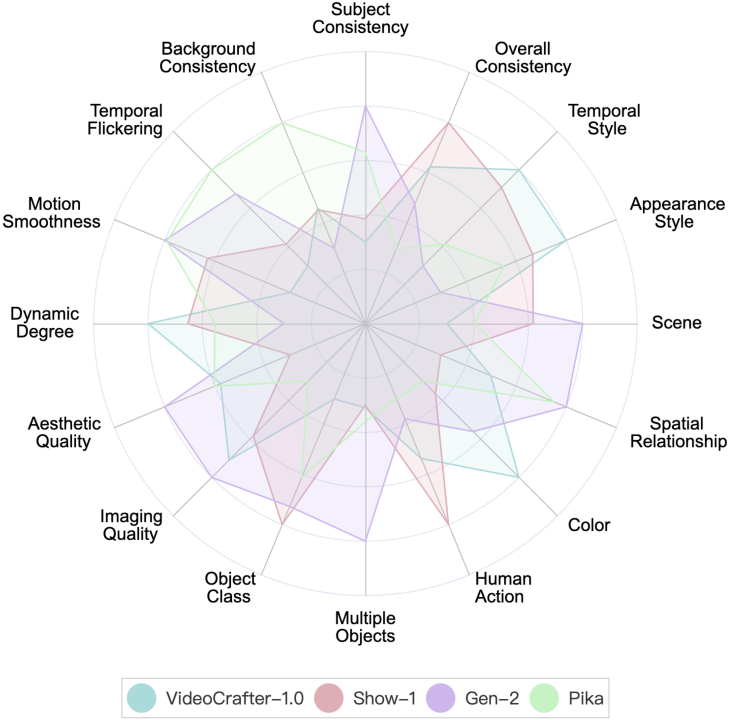

#VBench 目前給了Gen-2 和Pika 這兩家創業公司模式的評測結果。

Gen-2 和 Pika 在 VBench 上的表現。在雷達圖中,為了更清晰地視覺化比較,我們加入了 VideoCrafter-1.0 和 Show-1 作為參考,同時將每個維度的評測結果歸一化到了 0.3 與 0.8 之間。

#

#

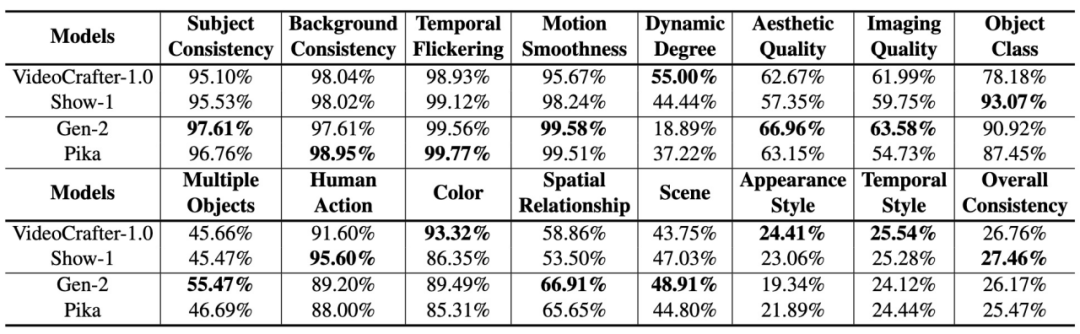

Performance of Gen-2 and Pika on VBench. We include the numerical results of VideoCrafter-1.0 and Show-1 as reference.

It can be seen that Gen-2 and Pika have obvious advantages in video quality (Video Quality), such as timing consistency (Temporal Consistency) and single frame quality (Aesthetic Quality and Imaging Quality) related dimensions. In terms of semantic consistency with user input prompts (such as Human Action and Appearance Style), partial-dimensional open source models will be better.

Video generation model VS picture generation model

Video generation model VS Image generation model. Among them, SD1.4, SD2.1 and SDXL are image generation models.

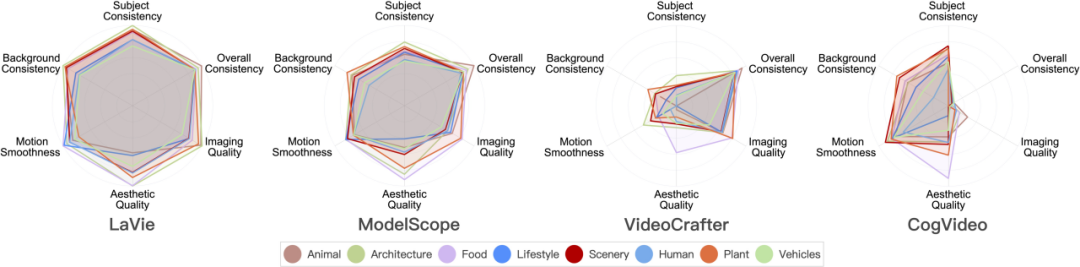

The performance of the video generation model in 8 major scene categories

The following are the performance of different models in 8 different categories evaluation results on.

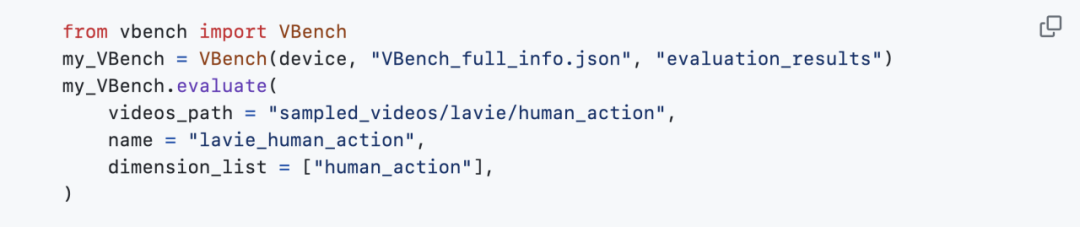

VBench is now open source and can be installed with one click

At present, VBench is fully open source. And supports one-click installation. Everyone is welcome to play, test the models you are interested in, and work together to promote the development of the video generation community.

#Open source address :https://github.com/Vchitect/VBench

We have also open sourced a series of Prompt Lists : https://github.com/Vchitect/VBench/tree/master/prompts, including Benchmarks for evaluation in different capability dimensions, as well as evaluation Benchmarks on different scenario content.

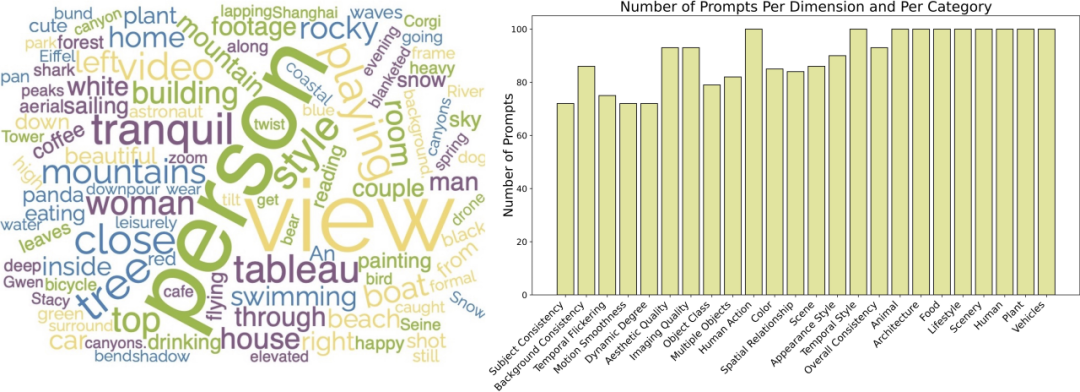

The word cloud on the left shows the distribution of high-frequency words in our Prompt Suites, and the picture on the right shows the statistics of the number of prompts in different dimensions and categories.

Is VBench accurate?

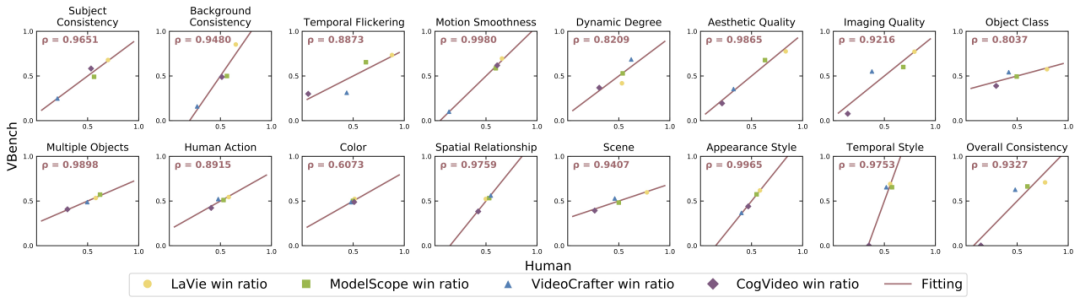

For each dimension, we calculated the correlation between the VBench evaluation results and the manual evaluation results to verify the consistency of our method with human perception. In the figure below, the horizontal axis represents the manual evaluation results in different dimensions, and the vertical axis shows the results of the automatic evaluation of the VBench method. It can be seen that our method is highly aligned with human perception in all dimensions.

VBench brings thinking to AI video generation

VBench can not only evaluate existing models , More importantly, various problems that may exist in different models can also be discovered, providing valuable insights for the future development of AI video generation.

"Temporal continuity" and "video dynamic level": Don't choose one or the other, but improve both

We found that there is a certain trade-off relationship between temporal coherence (such as Subject Consistency, Background Consistency, Motion Smoothness) and the amplitude of motion in the video (Dynamic Degree). For example, Show-1 and VideoCrafter-1.0 performed very well in terms of background consistency and action smoothness, but scored lower in terms of dynamics; this may be because the generated "not moving" pictures are more likely to appear "in the timing" Very coherent." VideoCrafter-0.9, on the other hand, is weaker on the dimension related to timing consistency, but scores high on Dynamic Degree.

This shows that it is indeed difficult to achieve "temporal coherence" and "higher dynamic level" at the same time; in the future, we should not only focus on improving one aspect, but should also improve "temporal coherence" And "the dynamic level of the video", this is meaningful.

Evaluate by scene content to explore the potential of each model

Some models perform well in different categories There are big differences in performance. For example, in terms of aesthetic quality, CogVideo performs well in the "Food" category, but scores lower in the "LifeStyle" category. If the training data is adjusted, can the aesthetic quality of CogVideo in the "LifeStyle" categories be improved, thereby improving the overall video aesthetic quality of the model?

This also tells us that when evaluating video generation models, we need to consider the performance of the model under different categories or topics, explore the upper limit of the model in a certain capability dimension, and then target Improve the "holding back" scenario category.

Categories with complex motion: poor spatiotemporal performance

Categories with high spatial complexity, Scores in the aesthetic quality dimension are relatively low. For example, the "LifeStyle" category has relatively high requirements for the layout of complex elements in space, and the "Human" category poses challenges due to the generation of hinged structures.

For categories with complex timing, such as the "Human" category which usually involves complex movements and the "Vehicle" category which often moves faster, they score equally in all tested dimensions. relatively low. This shows that the current model still has certain deficiencies in processing temporal modeling. The temporal modeling limitations may lead to spatial blurring and distortion, resulting in unsatisfactory video quality in both time and space.

Difficult to generate categories: little benefit from increasing data volume

We use the commonly used video data set WebVid- 10M conducted statistics and found that about 26% of the data was related to "Human", accounting for the highest proportion among the eight categories we counted. However, in the evaluation results, the “Human” category was one of the worst performing among the eight categories.

This shows that for a complex category like "Human", simply increasing the amount of data may not bring significant improvements to performance. One potential method is to guide the learning of the model by introducing "Human" related prior knowledge or control, such as Skeletons, etc.

Millions of data sets: improving data quality takes precedence over data quantity

Although the "Food" category Occupying only 11% of WebVid-10M, it almost always has the highest aesthetic quality score in the review. So we further analyzed the aesthetic quality performance of different categories of content in the WebVid-10M data set and found that the "Food" category also had the highest aesthetic score in WebVid-10M.

This means that on the basis of millions of data, filtering/improving data quality is more helpful than increasing the amount of data.

Ability to be improved: Accurately generate multiple objects and the relationship between objects

Current video generation The model still cannot catch up with the image generation model (especially SDXL) in terms of "Multiple Objects" and "Spatial Relationship", which highlights the importance of improving combination capabilities. The so-called combination ability refers to whether the model can accurately display multiple objects in video generation, as well as the spatial and interactive relationships between them.

Potential solutions to this problem may include:

- Data labeling: Construct a video dataset to provide A clear description of multiple objects, as well as a description of the spatial positional relationships and interactions between objects.

- Add intermediate modes/modules during the video generation process to assist in controlling the combination and spatial position of objects.

- Using a better text encoder (Text Encoder) will also have a greater impact on the combined generation ability of the model.

- Curve to save the country: hand over the "object combination" problem that T2V cannot do well to T2I, and generate videos through T2I I2V. This approach may also be effective for many other video generation problems.

以上是AI視訊生成框架測試競爭:Pika、Gen-2、ModelScope、SEINE,誰能勝出?的詳細內容。更多資訊請關注PHP中文網其他相關文章!

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

Video Face Swap

使用我們完全免費的人工智慧換臉工具,輕鬆在任何影片中換臉!

熱門文章

熱工具

記事本++7.3.1

好用且免費的程式碼編輯器

SublimeText3漢化版

中文版,非常好用

禪工作室 13.0.1

強大的PHP整合開發環境

Dreamweaver CS6

視覺化網頁開發工具

SublimeText3 Mac版

神級程式碼編輯軟體(SublimeText3)

C 中的chrono庫如何使用?

Apr 28, 2025 pm 10:18 PM

C 中的chrono庫如何使用?

Apr 28, 2025 pm 10:18 PM

使用C 中的chrono庫可以讓你更加精確地控制時間和時間間隔,讓我們來探討一下這個庫的魅力所在吧。 C 的chrono庫是標準庫的一部分,它提供了一種現代化的方式來處理時間和時間間隔。對於那些曾經飽受time.h和ctime折磨的程序員來說,chrono無疑是一個福音。它不僅提高了代碼的可讀性和可維護性,還提供了更高的精度和靈活性。讓我們從基礎開始,chrono庫主要包括以下幾個關鍵組件:std::chrono::system_clock:表示系統時鐘,用於獲取當前時間。 std::chron

如何理解C 中的DMA操作?

Apr 28, 2025 pm 10:09 PM

如何理解C 中的DMA操作?

Apr 28, 2025 pm 10:09 PM

DMA在C 中是指DirectMemoryAccess,直接內存訪問技術,允許硬件設備直接與內存進行數據傳輸,不需要CPU干預。 1)DMA操作高度依賴於硬件設備和驅動程序,實現方式因係統而異。 2)直接訪問內存可能帶來安全風險,需確保代碼的正確性和安全性。 3)DMA可提高性能,但使用不當可能導致系統性能下降。通過實踐和學習,可以掌握DMA的使用技巧,在高速數據傳輸和實時信號處理等場景中發揮其最大效能。

怎樣在C 中處理高DPI顯示?

Apr 28, 2025 pm 09:57 PM

怎樣在C 中處理高DPI顯示?

Apr 28, 2025 pm 09:57 PM

在C 中處理高DPI顯示可以通過以下步驟實現:1)理解DPI和縮放,使用操作系統API獲取DPI信息並調整圖形輸出;2)處理跨平台兼容性,使用如SDL或Qt的跨平台圖形庫;3)進行性能優化,通過緩存、硬件加速和動態調整細節級別來提升性能;4)解決常見問題,如模糊文本和界面元素過小,通過正確應用DPI縮放來解決。

C 中的實時操作系統編程是什麼?

Apr 28, 2025 pm 10:15 PM

C 中的實時操作系統編程是什麼?

Apr 28, 2025 pm 10:15 PM

C 在實時操作系統(RTOS)編程中表現出色,提供了高效的執行效率和精確的時間管理。 1)C 通過直接操作硬件資源和高效的內存管理滿足RTOS的需求。 2)利用面向對象特性,C 可以設計靈活的任務調度系統。 3)C 支持高效的中斷處理,但需避免動態內存分配和異常處理以保證實時性。 4)模板編程和內聯函數有助於性能優化。 5)實際應用中,C 可用於實現高效的日誌系統。

怎樣在C 中測量線程性能?

Apr 28, 2025 pm 10:21 PM

怎樣在C 中測量線程性能?

Apr 28, 2025 pm 10:21 PM

在C 中測量線程性能可以使用標準庫中的計時工具、性能分析工具和自定義計時器。 1.使用庫測量執行時間。 2.使用gprof進行性能分析,步驟包括編譯時添加-pg選項、運行程序生成gmon.out文件、生成性能報告。 3.使用Valgrind的Callgrind模塊進行更詳細的分析,步驟包括運行程序生成callgrind.out文件、使用kcachegrind查看結果。 4.自定義計時器可靈活測量特定代碼段的執行時間。這些方法幫助全面了解線程性能,並優化代碼。

給MySQL表添加和刪除字段的操作步驟

Apr 29, 2025 pm 04:15 PM

給MySQL表添加和刪除字段的操作步驟

Apr 29, 2025 pm 04:15 PM

在MySQL中,添加字段使用ALTERTABLEtable_nameADDCOLUMNnew_columnVARCHAR(255)AFTERexisting_column,刪除字段使用ALTERTABLEtable_nameDROPCOLUMNcolumn_to_drop。添加字段時,需指定位置以優化查詢性能和數據結構;刪除字段前需確認操作不可逆;使用在線DDL、備份數據、測試環境和低負載時間段修改表結構是性能優化和最佳實踐。

量化交易所排行榜2025 數字貨幣量化交易APP前十名推薦

Apr 30, 2025 pm 07:24 PM

量化交易所排行榜2025 數字貨幣量化交易APP前十名推薦

Apr 30, 2025 pm 07:24 PM

交易所內置量化工具包括:1. Binance(幣安):提供Binance Futures量化模塊,低手續費,支持AI輔助交易。 2. OKX(歐易):支持多賬戶管理和智能訂單路由,提供機構級風控。獨立量化策略平台有:3. 3Commas:拖拽式策略生成器,適用於多平台對沖套利。 4. Quadency:專業級算法策略庫,支持自定義風險閾值。 5. Pionex:內置16 預設策略,低交易手續費。垂直領域工具包括:6. Cryptohopper:雲端量化平台,支持150 技術指標。 7. Bitsgap:

deepseek官網是如何實現鼠標滾動事件穿透效果的?

Apr 30, 2025 pm 03:21 PM

deepseek官網是如何實現鼠標滾動事件穿透效果的?

Apr 30, 2025 pm 03:21 PM

如何實現鼠標滾動事件穿透效果?在我們瀏覽網頁時,經常會遇到一些特別的交互設計。比如在deepseek官網上,�...