Google Gemini 1.5 arrives fast: MoE architecture, 1 million token context

Today, Google announced Gemini 1.5.

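Gemini 1.5 builds on research and engineering innovations across Google's foundation models and infrastructure. This release introduces a new Mixture-of-Experts (MoE) architecture that makes Gemini 1.5 more efficient to train and serve.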

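The first Gemini 1.5 model Google is releasing for early testing is Gemini 1.5 Pro. It is a mid-size multimodal model optimized to scale across a wide range of tasks. It performs at a similar level to 1.0 Ultra, Google's largest model, and introduces a breakthrough experimental feature for long-context understanding.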

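Gemini 1.5 Pro comes with a standard context window of 128,000 tokens. Starting today, however, Google is giving a limited group of developers and enterprise customers a private preview in AI Studio and Vertex AI, where they can try a context window of up to 1,000,000 tokens. Google has also made optimizations aimed at improving latency, reducing computational requirements, and enhancing the user experience.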

Google CEO Sundar Pichai and Google DeepMind CEO Demis Hassabis each gave a dedicated introduction to the new model.

Gemini 1.5 builds on Google's leading research into Transformer and MoE architectures. Whereas a traditional Transformer functions as one large neural network, an MoE model is divided into smaller "expert" neural networks.

Depending on the type of input, an MoE model learns to selectively activate only the most relevant expert pathways in its neural network. This specialization greatly increases the model's efficiency. Google has been an early adopter and pioneer of MoE techniques for deep learning through research such as Sparsely-Gated MoE, GShard-Transformer, Switch-Transformer, M4, and more.
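The routing idea above can be sketched in a few lines. This is a toy, hypothetical top-k gated MoE layer in the style of the sparsely gated MoE research cited above, not Gemini 1.5's implementation: Google has not published those routing details, so the single-matrix linear experts, Gaussian initialization, `top_k=2`, and every name here (`MoELayer`, `forward`, `last_active`) are illustrative assumptions.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(logits)  # subtract the max to avoid overflow in exp
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


class MoELayer:
    """Toy sparsely gated MoE: a gate scores every expert, but only the top-k run."""

    def __init__(self, dim: int, num_experts: int, top_k: int = 2, seed: int = 0):
        rng = random.Random(seed)

        def rand(rows: int, cols: int) -> Matrix:
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.top_k = top_k
        self.gate = rand(num_experts, dim)  # one gating score per expert
        # Assumption: each "expert" is one linear map; real experts are feed-forward blocks.
        self.experts = [rand(dim, dim) for _ in range(num_experts)]
        self.last_active: List[int] = []  # experts used on the most recent input

    def forward(self, x: Vector) -> Vector:
        logits = matvec(self.gate, x)
        # Select the k highest-scoring experts for this input.
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        weights = softmax([logits[i] for i in top])  # renormalize over selected experts only
        self.last_active = top
        out = [0.0] * len(x)
        for w, i in zip(weights, top):
            y = matvec(self.experts[i], x)  # unselected experts are never evaluated
            out = [o + w * yi for o, yi in zip(out, y)]
        return out


if __name__ == "__main__":
    layer = MoELayer(dim=4, num_experts=8, top_k=2)
    y = layer.forward([1.0, 0.5, -0.3, 2.0])
    print(len(y), layer.last_active)  # output size, then the two expert indices used
```

In this toy, the efficiency gain comes from looping over `top` only: with 8 experts and `top_k=2`, each input pays for two expert computations instead of eight, while the layer's parameters still cover all eight experts.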

Google's latest innovations in model architecture allow Gemini 1.5 to learn complex tasks more quickly and maintain quality, while being more efficient to train and serve. These efficiencies are helping Google's teams iterate, train, and deliver more advanced versions of Gemini faster than ever before, and further optimizations are in the works.

An AI model's "context window" is made up of tokens, the building blocks used to process information. A token can be an entire part or a subsection of text, images, video, audio, or code. The larger a model's context window, the more information it can take in and process in a given prompt, which makes its output more consistent, relevant, and useful.
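To illustrate what "fitting in a context window" means, here is a minimal sketch that counts tokens and checks a prompt against the window sizes the article mentions. The tokenizer is an assumption: `toy_tokenize` simply splits on words and punctuation, whereas real models use learned subword tokenizers, so actual token counts for Gemini will differ.

```python
import re
from typing import List

# Context-window sizes mentioned in the article, in tokens.
WINDOWS = {
    "Gemini 1.0": 32_000,
    "Gemini 1.5 Pro (standard)": 128_000,
    "Gemini 1.5 Pro (preview)": 1_000_000,
}


def toy_tokenize(text: str) -> List[str]:
    """Hypothetical stand-in tokenizer: runs of word characters and single punctuation marks.

    Real models use learned subword tokenizers, so real counts will differ.
    """
    return re.findall(r"\w+|[^\w\s]", text)


def fits(text: str, window: int) -> bool:
    """True if the toy token count of `text` is within `window` tokens."""
    return len(toy_tokenize(text)) <= window


if __name__ == "__main__":
    prompt = "The quick brown fox. " * 50_000  # 5 toy tokens x 50,000 = 250,000 tokens
    for name, size in WINDOWS.items():
        print(f"{name}: {'fits' if fits(prompt, size) else 'too long'}")
```

With this toy count, the 250,000-token prompt exceeds both the 32,000- and 128,000-token windows and fits only in the 1-million-token preview window.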

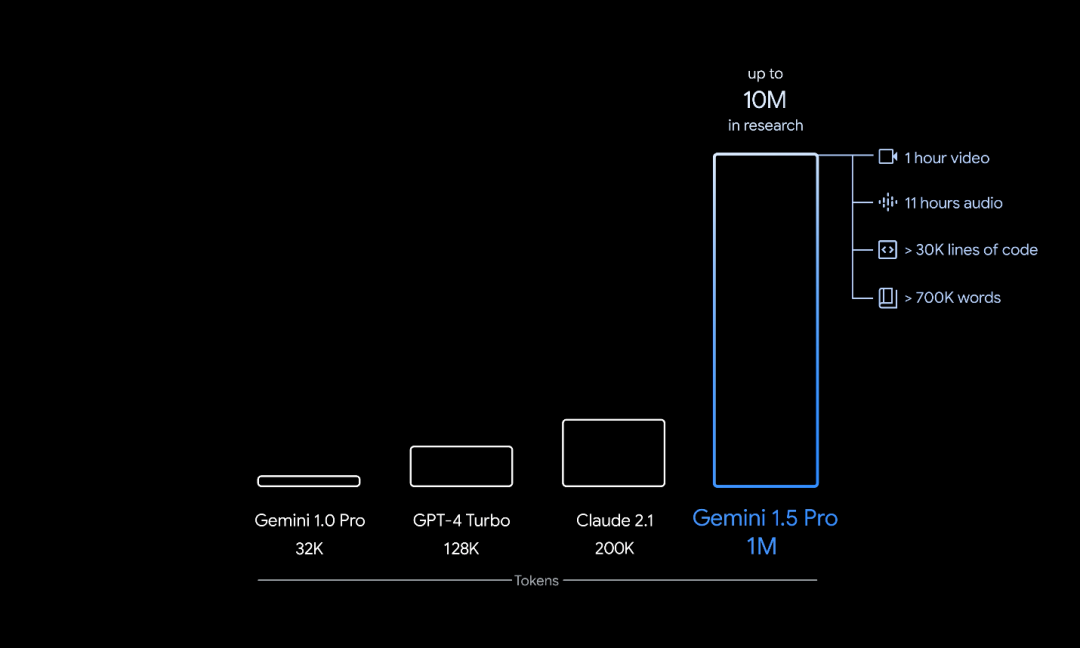

Through a series of machine learning innovations, Google has increased 1.5 Pro's context window capacity far beyond the original 32,000 tokens of Gemini 1.0. The model can now run with up to 1 million tokens in production.

This means 1.5 Pro can process vast amounts of information in one go, including 1 hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words. In its research, Google also successfully tested up to 10 million tokens.

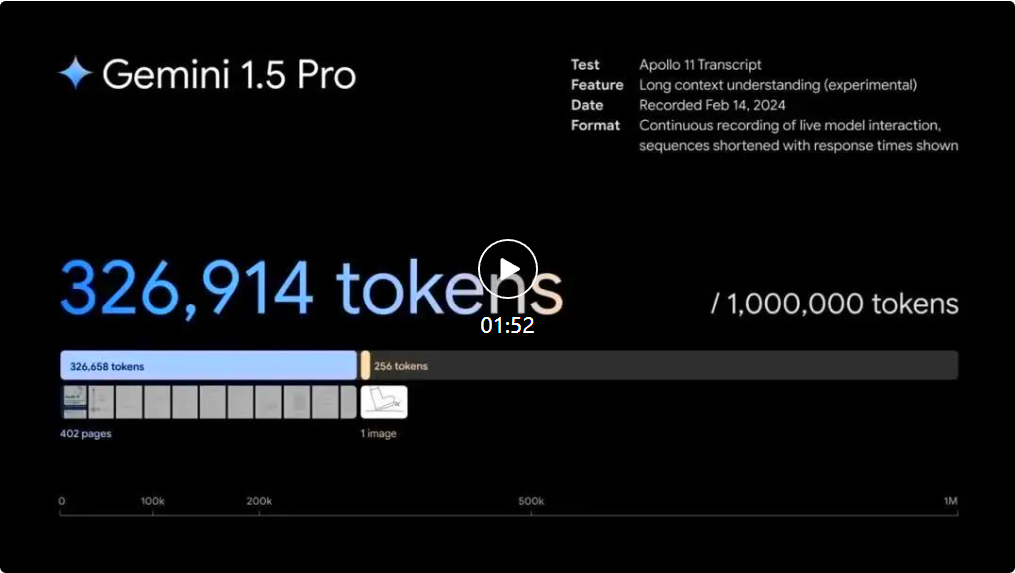

1.5 Pro can seamlessly analyze, classify, and summarize large amounts of content within a given prompt. For example, when given the 402-page transcript of the Apollo 11 moon landing mission, it can reason about conversations, events, and details found throughout the document.

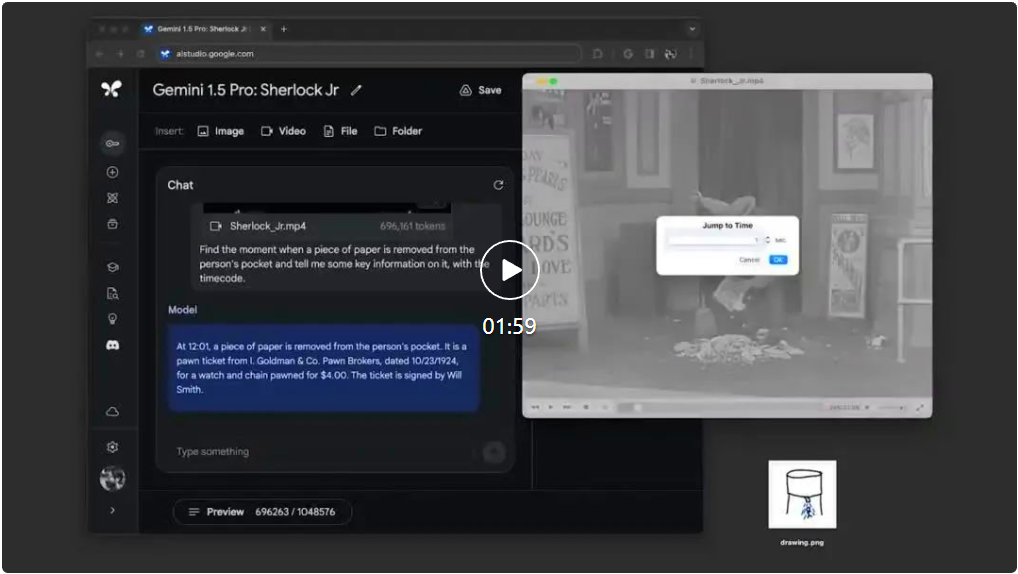

1.5 Pro can perform highly sophisticated understanding and reasoning tasks across different modalities, including video. For example, when given a 44-minute silent film by Buster Keaton, the model can accurately analyze various plot points and events, and even reason about small details in the film that could easily be missed.

When given a simple line drawing as reference material for a real-life object, Gemini 1.5 Pro can identify the matching scene in the 44-minute Buster Keaton silent film.

Gemini 1.5 Pro maintains a high level of performance even as the context window increases.

In the Needle In A Haystack (NIAH) evaluation, where a small piece of text containing a particular fact or statement is deliberately placed within a very long block of text, 1.5 Pro found the embedded text 99% of the time, in blocks of data as long as 1 million tokens.
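The NIAH procedure described above can be sketched as a small harness: insert a "needle" sentence at several relative depths in filler text, ask a question, and score whether the reply contains the expected fact. This is a hypothetical sketch, not Google's evaluation code; `toy_model` is a placeholder standing in for a real model call, and the filler text, needle, question, and depth grid are all assumptions. A fuller version would also vary the haystack length, not just the needle depth.

```python
from typing import Callable, List


def build_haystack(filler: List[str], needle: str, depth: float) -> str:
    """Insert `needle` at relative position `depth` (0.0 = start, 1.0 = end) of the filler."""
    pos = round(depth * len(filler))
    return " ".join(filler[:pos] + [needle] + filler[pos:])


def niah_recall(model: Callable[[str, str], str], filler: List[str],
                needle: str, question: str, answer: str,
                depths: List[float]) -> float:
    """Fraction of needle depths at which the model's reply contains the expected answer."""
    hits = 0
    for d in depths:
        context = build_haystack(filler, needle, d)
        reply = model(context, question)
        hits += int(answer.lower() in reply.lower())
    return hits / len(depths)


def toy_model(context: str, question: str) -> str:
    """Hypothetical stand-in for an LLM: returns the first sentence mentioning 'magic number'."""
    for sentence in context.split(". "):
        if "magic number" in sentence:
            return sentence
    return "I don't know"


if __name__ == "__main__":
    filler = ["The sky was grey that day."] * 1000
    score = niah_recall(toy_model, filler, "The magic number is 42.",
                        question="What is the magic number?", answer="42",
                        depths=[0.0, 0.25, 0.5, 0.75, 1.0])
    print(f"recall = {score:.0%}")  # prints "recall = 100%"
```

Swapping `toy_model` for a function that sends `context` and `question` to a real model turns the recall figure into a measurement comparable in spirit to the 99% result reported above.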

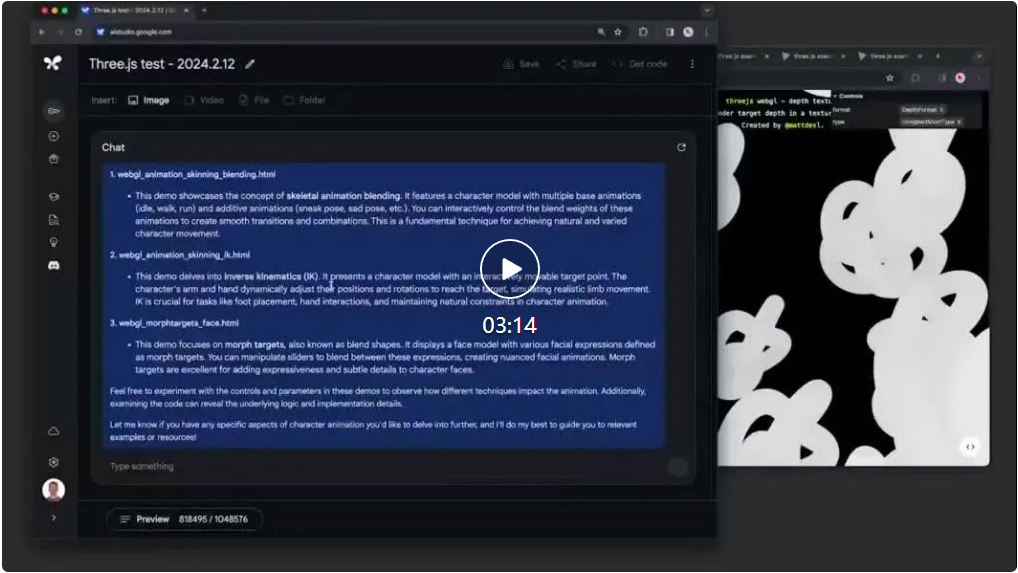

Gemini 1.5 Pro also shows impressive "in-context learning" skills, meaning it can learn a new skill from information given in a long prompt, without additional fine-tuning. Google tested this skill on the MTOB (Machine Translation from One Book) benchmark, which measures how well a model learns from information it has never seen before. When given a grammar manual for Kalamang, a language with fewer than 200 speakers worldwide, the model learned to translate English into Kalamang at a level similar to a person learning from the same content.

Because 1.5 Pro's long context window is the first of its kind among large-scale models, Google is continuously developing new evaluations and benchmarks to test its novel capabilities.

For more details, see the Gemini 1.5 Pro Technical Report.

Technical report address: https://storage.googleapis.com/deepmind-media/gemini/gemini_v1_5_report.pdf

Starting today, Google is offering a limited preview of 1.5 Pro to developers and enterprise customers through AI Studio and Vertex AI.

When the model is ready for a wider release, Google will launch 1.5 Pro with a standard 128,000-token context window. Soon after, Google plans to introduce pricing tiers that start at the standard 128,000-token context window and scale up to 1 million tokens as it improves the model.

Early testers can try the 1-million-token context window at no cost during the testing period, and significant speed improvements are on the way.

Developers interested in testing 1.5 Pro can register now in AI Studio, while enterprise customers can contact their Vertex AI account team.
