JS 的 AI 時代來了!

JS-Torch 簡介

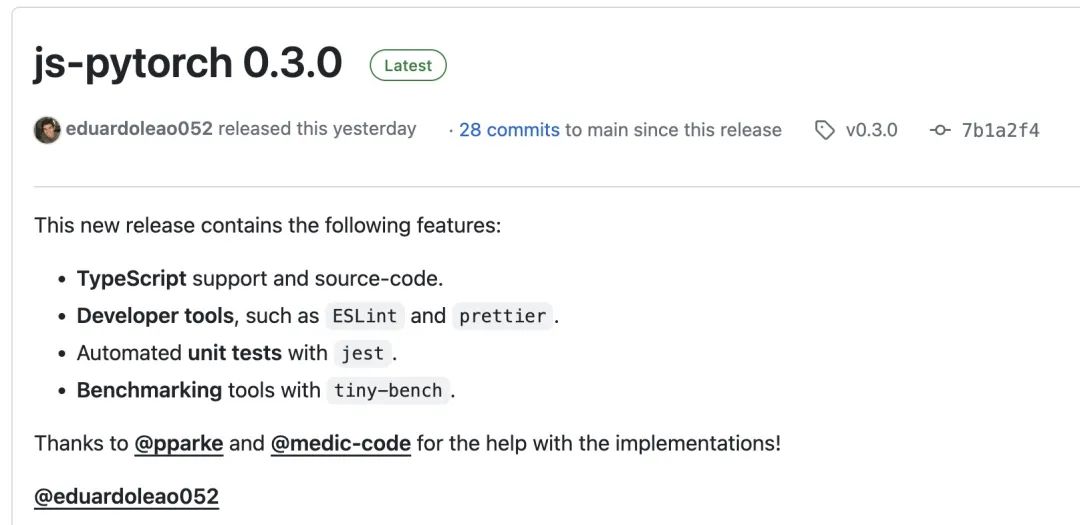

JS-Torch是一種深度學習JavaScript函式庫,其語法與PyTorch非常相似。它包含一個功能齊全的張量物件(可與追蹤梯度),深度學習層和函數,以及一個自動微分引擎。 JS-Torch適用於在JavaScript中進行深度學習研究,並提供了許多方便的工具和函數來加速深度學習開發。

圖片

圖片

PyTorch是一個開源的深度學習框架,由Meta的研究團隊開發和維護。它提供了豐富的工具和函式庫,用於建立和訓練神經網路模型。 PyTorch的設計理念是簡單和靈活,易於使用,它的動態計算圖特性使得模型構建更加直觀和靈活,同時也提高了模型構建和調試的效率。 PyTorch的動態計算圖特性也使得其模型建構更加直觀,便於調試和最佳化。此外,PyTorch還具有良好的可擴展性和運作效率,使得其在深度學習領域中廣受歡迎和應用。

你可以透過npm 或pnpm 來安裝 js-pytorch:

npm install js-pytorchpnpm add js-pytorch

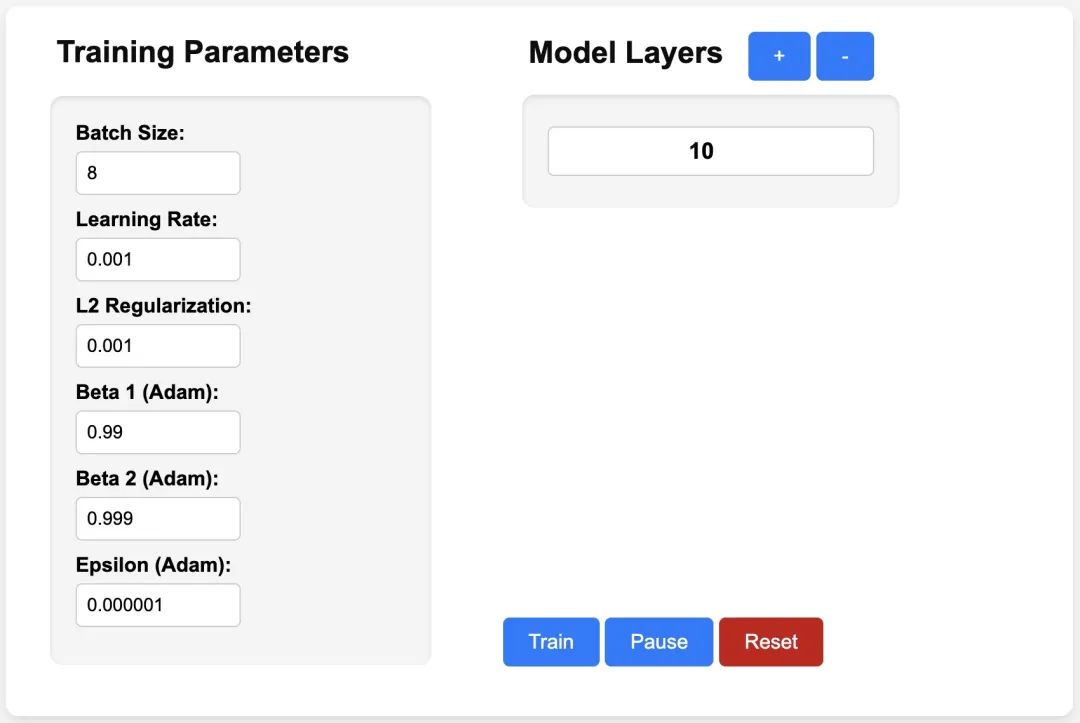

或線上體驗 js-pytorch 提供的 Demo[3]:

圖片

圖片

https://eduardoleao052.github.io/js-torch/assets/demo/demo.html

#JS-Torch 已支援的功能

目前 JS-Torch 已經支援Add、Subtract、Multiply、Divide 等張量操作,同時也支援Linear、MultiHeadSelfAttention、ReLU 和LayerNorm 等常用的深度學習層。

Tensor Operations

- Add

- Subtract

- Multiply

- Divide

- Matrix Multiply

- Power

- Square Root

- Exponentiate

- Log

- Sum

- Mean

- Variance

- Transpose

- At

- MaskedFill

- Reshape

Deep Learning Layers

- nn.Linear

- nn.MultiHeadSelfAttention

- nn.FullyConnected

- nn.Block

- nn.Embedding

- #nn.PositionalEmbedding

- # nn.ReLU

- nn.Softmax

- nn.Dropout

- #nn.LayerNorm

- nn.CrossEntropyLoss

#JS- Torch 使用範例

Simple Autograd

import { torch } from "js-pytorch";// Instantiate Tensors:let x = torch.randn([8, 4, 5]);let w = torch.randn([8, 5, 4], (requires_grad = true));let b = torch.tensor([0.2, 0.5, 0.1, 0.0], (requires_grad = true));// Make calculations:let out = torch.matmul(x, w);out = torch.add(out, b);// Compute gradients on whole graph:out.backward();// Get gradients from specific Tensors:console.log(w.grad);console.log(b.grad);Complex Autograd (Transformer)

import { torch } from "js-pytorch";const nn = torch.nn;class Transformer extends nn.Module {constructor(vocab_size, hidden_size, n_timesteps, n_heads, p) {super();// Instantiate Transformer's Layers:this.embed = new nn.Embedding(vocab_size, hidden_size);this.pos_embed = new nn.PositionalEmbedding(n_timesteps, hidden_size);this.b1 = new nn.Block(hidden_size,hidden_size,n_heads,n_timesteps,(dropout_p = p));this.b2 = new nn.Block(hidden_size,hidden_size,n_heads,n_timesteps,(dropout_p = p));this.ln = new nn.LayerNorm(hidden_size);this.linear = new nn.Linear(hidden_size, vocab_size);}forward(x) {let z;z = torch.add(this.embed.forward(x), this.pos_embed.forward(x));z = this.b1.forward(z);z = this.b2.forward(z);z = this.ln.forward(z);z = this.linear.forward(z);return z;}}// Instantiate your custom nn.Module:const model = new Transformer(vocab_size,hidden_size,n_timesteps,n_heads,dropout_p);// Define loss function and optimizer:const loss_func = new nn.CrossEntropyLoss();const optimizer = new optim.Adam(model.parameters(), (lr = 5e-3), (reg = 0));// Instantiate sample input and output:let x = torch.randint(0, vocab_size, [batch_size, n_timesteps, 1]);let y = torch.randint(0, vocab_size, [batch_size, n_timesteps]);let loss;// Training Loop:for (let i = 0; i < 40; i++) {// Forward pass through the Transformer:let z = model.forward(x);// Get loss:loss = loss_func.forward(z, y);// Backpropagate the loss using torch.tensor's backward() method:loss.backward();// Update the weights:optimizer.step();// Reset the gradients to zero after each training step:optimizer.zero_grad();}有了 JS-Torch 之後,在Node.js、Deno 等JS Runtime 上跑AI 應用的日子越來越近了。當然,JS-Torch 要推廣起來,它還需要解決一個很重要的問題,就是 GPU 加速。目前已有相關的討論,如果你有興趣的話,可以進一步閱讀相關內容:GPU Support[4] 。

參考資料

[1]JS-Torch: https://github.com/eduardoleao052/js-torch

[2]PyTorch: https://pytorch .org/

[3]Demo: https://eduardoleao052.github.io/js-torch/assets/demo/demo.html

[4]GPU Support: https:/ /github.com/eduardoleao052/js-torch/issues/1

以上是JS 的 AI 時代來了!的詳細內容。更多資訊請關注PHP中文網其他相關文章!

熱AI工具

Undresser.AI Undress

人工智慧驅動的應用程序,用於創建逼真的裸體照片

AI Clothes Remover

用於從照片中去除衣服的線上人工智慧工具。

Undress AI Tool

免費脫衣圖片

Clothoff.io

AI脫衣器

AI Hentai Generator

免費產生 AI 無盡。

熱門文章

熱工具

記事本++7.3.1

好用且免費的程式碼編輯器

SublimeText3漢化版

中文版,非常好用

禪工作室 13.0.1

強大的PHP整合開發環境

Dreamweaver CS6

視覺化網頁開發工具

SublimeText3 Mac版

神級程式碼編輯軟體(SublimeText3)

熱門話題

mysql 無法啟動怎麼解決

Apr 08, 2025 pm 02:21 PM

mysql 無法啟動怎麼解決

Apr 08, 2025 pm 02:21 PM

MySQL啟動失敗的原因有多種,可以通過檢查錯誤日誌進行診斷。常見原因包括端口衝突(檢查端口占用情況並修改配置)、權限問題(檢查服務運行用戶權限)、配置文件錯誤(檢查參數設置)、數據目錄損壞(恢復數據或重建表空間)、InnoDB表空間問題(檢查ibdata1文件)、插件加載失敗(檢查錯誤日誌)。解決問題時應根據錯誤日誌進行分析,找到問題的根源,並養成定期備份數據的習慣,以預防和解決問題。

了解 ACID 屬性:可靠數據庫的支柱

Apr 08, 2025 pm 06:33 PM

了解 ACID 屬性:可靠數據庫的支柱

Apr 08, 2025 pm 06:33 PM

數據庫ACID屬性詳解ACID屬性是確保數據庫事務可靠性和一致性的一組規則。它們規定了數據庫系統處理事務的方式,即使在系統崩潰、電源中斷或多用戶並發訪問的情況下,也能保證數據的完整性和準確性。 ACID屬性概述原子性(Atomicity):事務被視為一個不可分割的單元。任何部分失敗,整個事務回滾,數據庫不保留任何更改。例如,銀行轉賬,如果從一個賬戶扣款但未向另一個賬戶加款,則整個操作撤銷。 begintransaction;updateaccountssetbalance=balance-100wh

mysql 能返回 json 嗎

Apr 08, 2025 pm 03:09 PM

mysql 能返回 json 嗎

Apr 08, 2025 pm 03:09 PM

MySQL 可返回 JSON 數據。 JSON_EXTRACT 函數可提取字段值。對於復雜查詢,可考慮使用 WHERE 子句過濾 JSON 數據,但需注意其性能影響。 MySQL 對 JSON 的支持在不斷增強,建議關注最新版本及功能。

掌握SQL LIMIT子句:控制查詢中的行數

Apr 08, 2025 pm 07:00 PM

掌握SQL LIMIT子句:控制查詢中的行數

Apr 08, 2025 pm 07:00 PM

SQLLIMIT子句:控制查詢結果行數SQL中的LIMIT子句用於限制查詢返回的行數,這在處理大型數據集、分頁顯示和測試數據時非常有用,能有效提升查詢效率。語法基本語法:SELECTcolumn1,column2,...FROMtable_nameLIMITnumber_of_rows;number_of_rows:指定返回的行數。帶偏移量的語法:SELECTcolumn1,column2,...FROMtable_nameLIMIToffset,number_of_rows;offset:跳過

如何針對高負載應用程序優化 MySQL 性能?

Apr 08, 2025 pm 06:03 PM

如何針對高負載應用程序優化 MySQL 性能?

Apr 08, 2025 pm 06:03 PM

MySQL數據庫性能優化指南在資源密集型應用中,MySQL數據庫扮演著至關重要的角色,負責管理海量事務。然而,隨著應用規模的擴大,數據庫性能瓶頸往往成為製約因素。本文將探討一系列行之有效的MySQL性能優化策略,確保您的應用在高負載下依然保持高效響應。我們將結合實際案例,深入講解索引、查詢優化、數據庫設計以及緩存等關鍵技術。 1.數據庫架構設計優化合理的數據庫架構是MySQL性能優化的基石。以下是一些核心原則:選擇合適的數據類型選擇最小的、符合需求的數據類型,既能節省存儲空間,又能提升數據處理速度

使用 Prometheus MySQL Exporter 監控 MySQL 和 MariaDB Droplet

Apr 08, 2025 pm 02:42 PM

使用 Prometheus MySQL Exporter 監控 MySQL 和 MariaDB Droplet

Apr 08, 2025 pm 02:42 PM

有效監控 MySQL 和 MariaDB 數據庫對於保持最佳性能、識別潛在瓶頸以及確保整體系統可靠性至關重要。 Prometheus MySQL Exporter 是一款強大的工具,可提供對數據庫指標的詳細洞察,這對於主動管理和故障排除至關重要。

mysql 主鍵可以為 null

Apr 08, 2025 pm 03:03 PM

mysql 主鍵可以為 null

Apr 08, 2025 pm 03:03 PM

MySQL 主鍵不可以為空,因為主鍵是唯一標識數據庫中每一行的關鍵屬性,如果主鍵可以為空,則無法唯一標識記錄,將會導致數據混亂。使用自增整型列或 UUID 作為主鍵時,應考慮效率和空間佔用等因素,選擇合適的方案。

Navicat查看MongoDB數據庫密碼的方法

Apr 08, 2025 pm 09:39 PM

Navicat查看MongoDB數據庫密碼的方法

Apr 08, 2025 pm 09:39 PM

直接通過 Navicat 查看 MongoDB 密碼是不可能的,因為它以哈希值形式存儲。取回丟失密碼的方法:1. 重置密碼;2. 檢查配置文件(可能包含哈希值);3. 檢查代碼(可能硬編碼密碼)。