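I am crawling data with multiple processes and writing it to a file. The run reports no errors, but the file is garbled when I open it.

When I rewrote the crawler with multiple threads, the problem did not occur and everything worked.

Here is the code that writes the data to the file: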

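    import codecs
    import os

    import requests
    from bs4 import BeautifulSoup

    # baseurl, baseurl1, baseurl2, searchword and header are defined elsewhere in the script.

    def Get_urls(start_page, end_page):
        print(' run task {} ({})'.format(start_page, os.getpid()))
        # Every worker process opens the same file in append mode.
        url_text = codecs.open('url.txt', 'a', 'utf-8')
        for i in range(start_page, end_page + 1):
            pageurl = baseurl1 + str(i) + baseurl2 + searchword
            response = requests.get(pageurl, headers=header)
            soup = BeautifulSoup(response.content, 'html.parser')
            a_list = soup.find_all('a')
            for a in a_list:
                if a.text != '' and 'wssd_content.jsp?bookid' in a['href']:
                    text = a.text.strip()
                    url = baseurl + str(a['href'])
                    url_text.write(text + '\t' + url + '\n')
        url_text.close()

The process pool used for the multiprocessing version: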

    import multiprocessing
    import os
    import time

    def Multiple_processes_test():
        t1 = time.time()
        print('parent process {} '.format(os.getpid()))
        page_ranges_list = [(1, 3), (4, 6), (7, 9)]
        pool = multiprocessing.Pool(processes=3)
        # One task per page range; each task runs Get_urls in a worker process.
        for page_range in page_ranges_list:
            pool.apply_async(func=Get_urls, args=(page_range[0], page_range[1]))
        pool.close()
        pool.join()
        t2 = time.time()
        print('Elapsed time: {}'.format(t2 - t1))

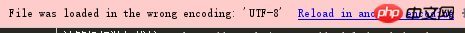

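As the screenshot already says, the file was loaded with the wrong encoding, which means that what your processes wrote to it is not valid UTF-8.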
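One way to confirm this diagnosis is to decode the file strictly as UTF-8 and see where it first fails. The helper below is only a sketch; the name `first_invalid_utf8_offset` is made up for illustration, and it reads the whole file into memory, which is assumed to be acceptable for a small `url.txt`.

```python
import io


def first_invalid_utf8_offset(path):
    """Return the byte offset of the first invalid UTF-8 sequence, or None if the file is clean."""
    with io.open(path, 'rb') as f:
        data = f.read()
    try:
        data.decode('utf-8')  # strict decoding raises on the first bad sequence
    except UnicodeDecodeError as exc:
        return exc.start
    return None
```

If this returns an offset for `url.txt`, looking at the bytes around that position usually shows whether a multi-byte character was cut in half by another write.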

Add this as the first line of the file:

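Having several processes open the same file at the same time is quite risky and very likely to go wrong.

The multithreaded version most likely works because of the GIL. Separate processes share no lock by default, so their writes can easily interfere with each other.

I suggest switching to a producer-consumer pattern!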

For example, like this:

Result:

    foo /4/wssd_content.jsp?bookid
    foo /5/wssd_content.jsp?bookid
    foo /6/wssd_content.jsp?bookid
    foo /1/wssd_content.jsp?bookid
    foo /2/wssd_content.jsp?bookid
    foo /3/wssd_content.jsp?bookid
    foo /7/wssd_content.jsp?bookid
    foo /8/wssd_content.jsp?bookid
    foo /9/wssd_content.jsp?bookid
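The code behind the result above is not reproduced in this thread, so the following is only a minimal sketch of the producer-consumer idea, not the answerer's actual code. The names `produce`, `consume` and `run` are hypothetical, the scraping is replaced by placeholder rows shaped like the result above, and a `multiprocessing.Manager().Queue()` is assumed as the channel because a manager queue can be passed to pool workers as an argument. The key point is that only one process ever opens the file.

```python
import io
import multiprocessing


def produce(args):
    """Producer: build the rows for one page range and put them on the queue."""
    queue, start_page, end_page = args
    for page in range(start_page, end_page + 1):
        # Placeholder for the real requests/BeautifulSoup scraping.
        queue.put(u'foo\t/{}/wssd_content.jsp?bookid\n'.format(page))


def consume(queue, path):
    """Consumer: the only process that opens and writes the output file."""
    with io.open(path, 'a', encoding='utf-8') as out:
        while True:
            row = queue.get()
            if row is None:  # sentinel: all producers have finished
                break
            out.write(row)


def run(path, page_ranges):
    """Start one writer process and a pool of producers, then wait for both."""
    manager = multiprocessing.Manager()
    queue = manager.Queue()
    writer = multiprocessing.Process(target=consume, args=(queue, path))
    writer.start()
    pool = multiprocessing.Pool(processes=3)
    try:
        # map() re-raises worker exceptions in the parent instead of hiding them.
        pool.map(produce, [(queue, start, end) for start, end in page_ranges])
    finally:
        pool.close()
        pool.join()
        queue.put(None)  # tell the writer to stop once the queue is drained
        writer.join()
        manager.shutdown()
```

It would be called as `run('url.txt', [(1, 3), (4, 6), (7, 9)])` from under an `if __name__ == '__main__':` guard. Rows from different page ranges can still arrive in any order, as in the result above, but each row is written whole because a single writer owns the file.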