Real-time Profiling a MongoDB Cluster

by A. Jesse Jiryu Davis, Python Evangelist at 10gen In a sharded cluster of replica sets, which server or servers handle each of your queries? What about each insert, update, or command? If you know how a MongoDB cluster routes operations

by A. Jesse Jiryu Davis, Python Evangelist at 10gen

In a sharded cluster of replica sets, which server or servers handle each of your queries? What about each insert, update, or command? If you know how a MongoDB cluster routes operations among its servers, you can predict how your application will scale as you add shards and add members to shards.

Operations are routed according to the type of operation, your shard key, and your read preference. Let’s set up a cluster and use the system profiler to see where each operation is run. This is an interactive, experimental way to learn how your cluster really behaves and how your architecture will scale.

Setup

You’ll need a recent install of MongoDB (I’m using 2.4.4), Python, a recent version of PyMongo (at least 2.4—I’m using 2.5.2) and the code in my cluster-profile repository on GitHub. If you install the Colorama Python package you’ll get cute colored output. These scripts were tested on my Mac.

Sharded cluster of replica sets

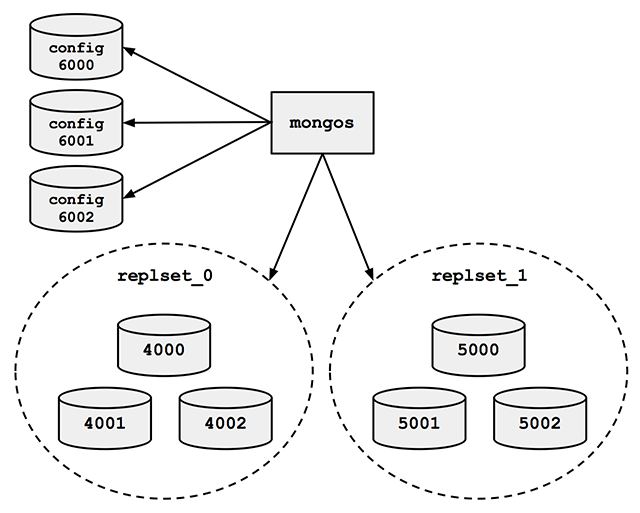

Run the cluster_setup.py script in my repository. It sets up a standard sharded cluster for you running on your local machine. There’s a mongos, three config servers, and two shards, each of which is a three-member replica set. The first shard’s replica set is running on ports 4000 through 4002, the second shard is on ports 5000 through 5002, and the three config servers are on ports 6000 through 6002:

For the finale, cluster_setup.py makes a collection named sharded_collection, sharded on a key named shard_key.

In a normal deployment, we’d let MongoDB’s balancer automatically distribute chunks of data among our two shards. But for this demo we want documents to be on predictable shards, so my script disables the balancer. It makes a chunk for all documents with shard_key less than 500 and another chunk for documents with shard_key greater than or equal to 500. It moves the high chunk to replset_1:

client = MongoClient() # Connect to mongos. admin = client.admin # admin database.

Pre-split.

admin.command(

'split', 'test.sharded_collection',

middle={'shard_key': 500})

admin.command(

'moveChunk', 'test.sharded_collection',

find={'shard_key': 500},

to='replset_1')If you connect to mongos with the MongoDB shell, sh.status() shows there’s one chunk on each of the two shards:

{ "shard_key" : { "$minKey" : 1 } } -->> { "shard_key" : 500 } on : replset_0 { "t" : 2, "i" : 1 }

{ "shard_key" : 500 } -->> { "shard_key" : { "$maxKey" : 1 } } on : replset_1 { "t" : 2, "i" : 0 }The setup script also inserts a document with a shard_key of 0 and another with a shard_key of 500. Now we’re ready for some profiling.

Profiling

Run the tail_profile.py script from my repository. It connects to all the replica set members. On each, it sets the profiling level to 2 (“log everything”) on the test database, and creates a tailable cursor on the system.profile collection. The script filters out some noise in the profile collection—for example, the activities of the tailable cursor show up in the system.profile collection that it’s tailing. Any legitimate entries in the profile are spat out to the console in pretty colors.

Experiments

Targeted queries versus scatter-gather

Let’s run a query from Python in a separate terminal:

>>> from pymongo import MongoClient

>>> # Connect to mongos.

>>> collection = MongoClient().test.sharded_collection

>>> collection.find_one({'shard_key': 0})

{'_id': ObjectId('51bb6f1cca1ce958c89b348a'), 'shard_key': 0}

tail_profile.py prints:

replset_0 primary on 4000: query test.sharded_collection {“shard_key”: 0}

The query includes the shard key, so mongos reads from the shard that can satisfy it. Adding shards can scale out your throughput on a query like this. What about a query that doesn’t contain the shard key?:

>>> collection.find_one({})mongos sends the query to both shards:

replset_0 primary on 4000: query test.sharded_collection {“shard_key”: 0}

replset_1 primary on 5000: query test.sharded_collection {“shard_key”: 500}

For fan-out queries like this, adding more shards won’t scale out your query throughput as well as it would for targeted queries, because every shard has to process every query. But we can scale throughput on queries like these by reading from secondaries.

Queries with read preferences

We can use read preferences to read from secondaries:

>>> from pymongo.read_preferences import ReadPreference

>>> collection.find_one({}, read_preference=ReadPreference.SECONDARY)tail_profile.py shows us that mongos chose a random secondary from each shard:

replset_0 secondary on 4001: query test.sharded_collection {“$readPreference”: {“mode”: “secondary”}, “$query”: {}}

replset_1 secondary on 5001: query test.sharded_collection {“$readPreference”: {“mode”: “secondary”}, “$query”: {}}

Note how PyMongo passes the read preference to mongos in the query, as the $readPreference field. mongos targets one secondary in each of the two replica sets.

Updates

With a sharded collection, updates must either include the shard key or be “multi-updates”. An update with the shard key goes to the proper shard, of course:

>>> collection.update({'shard_key': -100}, {'$set': {'field': 'value'}})replset_0 primary on 4000: update test.sharded_collection {“shard_key”: -100}

mongos only sends the update to replset_0, because we put the chunk of documents with shard_key less than 500 there.

A multi-update hits all shards:

>>> collection.update({}, {'$set': {'field': 'value'}}, multi=True)replset_0 primary on 4000: update test.sharded_collection {}

replset_1 primary on 5000: update test.sharded_collection {}

A multi-update on a range of the shard key need only involve the proper shard:

>>> collection.update({'shard_key': {'$gt': 1000}}, {'$set': {'field': 'value'}}, multi=True)replset_1 primary on 5000: update test.sharded_collection {“shard_key”: {“$gt”: 1000}}

So targeted updates that include the shard key can be scaled out by adding shards. Even multi-updates can be scaled out if they include a range of the shard key, but multi-updates without the shard key won’t benefit from extra shards.

Commands

In version 2.4, mongos can use secondaries not only for queries, but also for some commands. You can run count on secondaries if you pass the right read preference:

>>> cursor = collection.find(read_preference=ReadPreference.SECONDARY) >>> cursor.count()

replset_0 secondary on 4001: command count: sharded_collection

replset_1 secondary on 5001: command count: sharded_collection

Whereas findAndModify, since it modifies data, is run on the primaries no matter your read preference:

>>> db = MongoClient().test

>>> test.command(

... 'findAndModify',

... 'sharded_collection',

... query={'shard_key': -1},

... remove=True,

... read_preference=ReadPreference.SECONDARY)replset_0 primary on 4000: command findAndModify: sharded_collection

Go Forth And Scale

To scale a sharded cluster, you should understand how operations are distributed: are they scatter-gather, or targeted to one shard? Do they run on primaries or secondaries? If you set up a cluster and test your queries interactively like we did here, you can see how your cluster behaves in practice, and design your application for future growth.

Read Jesse’s blog, Emptysquare and follow him on Github

原文地址:Real-time Profiling a MongoDB Cluster, 感谢原作者分享。

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

AI Hentai Generator

免费生成ai无尽的。

热门文章

热工具

记事本++7.3.1

好用且免费的代码编辑器

SublimeText3汉化版

中文版,非常好用

禅工作室 13.0.1

功能强大的PHP集成开发环境

Dreamweaver CS6

视觉化网页开发工具

SublimeText3 Mac版

神级代码编辑软件(SublimeText3)

热门话题

navicat过期怎么办

Apr 23, 2024 pm 12:12 PM

navicat过期怎么办

Apr 23, 2024 pm 12:12 PM

解决 Navicat 过期问题的方法包括:续订许可证;卸载并重新安装;禁用自动更新;使用 Navicat Premium Essentials 免费版;联系 Navicat 客户支持。

navicat怎么连mongodb

Apr 24, 2024 am 11:27 AM

navicat怎么连mongodb

Apr 24, 2024 am 11:27 AM

要使用 Navicat 连接 MongoDB,您需要:安装 Navicat创建 MongoDB 连接:a. 输入连接名称、主机地址和端口b. 输入认证信息(如果需要)添加 SSL 证书(如果需要)验证连接保存连接

net4.0有什么用

May 10, 2024 am 01:09 AM

net4.0有什么用

May 10, 2024 am 01:09 AM

.NET 4.0 用于创建各种应用程序,它为应用程序开发人员提供了丰富的功能,包括:面向对象编程、灵活性、强大的架构、云计算集成、性能优化、广泛的库、安全性、可扩展性、数据访问和移动开发支持。

无服务器架构中Java函数与数据库的集成

Apr 28, 2024 am 08:57 AM

无服务器架构中Java函数与数据库的集成

Apr 28, 2024 am 08:57 AM

在无服务器架构中,Java函数可以与数据库集成,以访问和操作数据库中的数据。关键步骤包括:创建Java函数、配置环境变量、部署函数和测试函数。通过遵循这些步骤,开发人员可以构建复杂的应用程序,无缝访问存储在数据库中的数据。

如何在Debian上配置MongoDB自动扩容

Apr 02, 2025 am 07:36 AM

如何在Debian上配置MongoDB自动扩容

Apr 02, 2025 am 07:36 AM

本文介绍如何在Debian系统上配置MongoDB实现自动扩容,主要步骤包括MongoDB副本集的设置和磁盘空间监控。一、MongoDB安装首先,确保已在Debian系统上安装MongoDB。使用以下命令安装:sudoaptupdatesudoaptinstall-ymongodb-org二、配置MongoDB副本集MongoDB副本集确保高可用性和数据冗余,是实现自动扩容的基础。启动MongoDB服务:sudosystemctlstartmongodsudosys

MongoDB在Debian上的高可用性如何保障

Apr 02, 2025 am 07:21 AM

MongoDB在Debian上的高可用性如何保障

Apr 02, 2025 am 07:21 AM

本文介绍如何在Debian系统上构建高可用性的MongoDB数据库。我们将探讨多种方法,确保数据安全和服务持续运行。关键策略:副本集(ReplicaSet):利用副本集实现数据冗余和自动故障转移。当主节点出现故障时,副本集会自动选举新的主节点,保证服务的持续可用性。数据备份与恢复:定期使用mongodump命令进行数据库备份,并制定有效的恢复策略,以应对数据丢失风险。监控与报警:部署监控工具(如Prometheus、Grafana)实时监控MongoDB的运行状态,并

nodejs如何连接数据库

Apr 21, 2024 am 06:16 AM

nodejs如何连接数据库

Apr 21, 2024 am 06:16 AM

连接到数据库,Node.js 提供了 MySQL、PostgreSQL、MongoDB 和 Redis 等多种数据库连接器包。连接步骤包括:1. 安装相应的连接器包;2. 创建连接池维护可重用连接;3. 建立与数据库的连接。注意:操作为异步,需处理错误,保证安全性,优化性能。

navicat能连接mongodb吗

Apr 23, 2024 pm 05:15 PM

navicat能连接mongodb吗

Apr 23, 2024 pm 05:15 PM

是的,Navicat 可以连接到 MongoDB 数据库。具体步骤包括:打开 Navicat 并创建新的连接。选择数据库类型为 MongoDB。输入 MongoDB 主机地址、端口和数据库名称。输入 MongoDB 用户名和密码(如果需要)。单击“连接”按钮。