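If you haven't heard, Python loops can be slow, especially when working with large datasets. If you're trying to run calculations across millions of data points, execution time can quickly become a bottleneck. Luckily, Numba has a just-in-time (JIT) compiler that we can use to help speed up numerical computations and loops in Python.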

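The other day, I found myself needing a simple exponential smoothing function in Python. The function needed to take in an array and return an array of the same length with the smoothed values. Typically, I try to avoid loops wherever possible in Python (especially when dealing with Pandas DataFrames). At my current level of capability, I didn't see how to exponentially smooth an array of values without a loop.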

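I am going to walk through the process of creating this exponential smoothing function and test it with and without JIT compilation. I'll briefly touch on JIT and on how I made sure to code the loop in a way that works with nopython mode.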

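JIT compilers are particularly useful for higher-level languages like Python, JavaScript, and Java. These languages are known for their flexibility and ease of use, but they can be slower to execute than lower-level languages like C or C++. JIT compilation helps bridge this gap by optimizing code at runtime, making it faster without sacrificing the advantages of these higher-level languages.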

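When using nopython=True mode in the Numba JIT compiler, the Python interpreter is bypassed entirely, forcing Numba to compile everything down to machine code (and to raise an error if it cannot). This allows faster execution by eliminating the overhead associated with Python's dynamic typing and other interpreter-related operations.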

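Exponential smoothing is a technique used to smooth out data by applying a weighted average over past observations. The formula for exponential smoothing is:

$$S_t = \alpha x_t + (1 - \alpha) S_{t-1}$$

Where $S_t$ is the smoothed value at step $t$, $x_t$ is the original value at step $t$, $S_{t-1}$ is the previous smoothed value, and $\alpha$ is the smoothing factor between 0 and 1. The first smoothed value is initialized to the first observation, $S_0 = x_0$.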
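To make the arithmetic concrete, here is a small worked example in plain Python. Each smoothed value is alpha times the current observation plus (1 - alpha) times the previous smoothed value. The function name `exponential_smoothing` and the sample numbers are made up for illustration and are not part of the test file in this post.

```python
def exponential_smoothing(values, alpha):
    """Plain-Python exponential smoothing, for illustration only."""
    # The first smoothed value is simply the first observation.
    smoothed = [float(values[0])]
    for x in values[1:]:
        # Weighted average of the new observation and the previous smoothed value.
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


print(exponential_smoothing([10, 20, 30, 40], alpha=0.5))
# [10.0, 15.0, 22.5, 31.25]
```

With alpha = 0.5, the second value is 0.5 * 20 + 0.5 * 10 = 15, the third is 0.5 * 30 + 0.5 * 15 = 22.5, and so on.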

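To implement this in Python, and stick to functionality that works with nopython=True mode, we will pass in an array of data values and the alpha float. I default alpha to 0.33333333 because that fits my current use case. We will initialize a zero-filled array to store the smoothed values, loop and calculate, and return the smoothed values. This is what it looks like: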

    @jit(nopython=True)
    def fast_exponential_smoothing(values, alpha=0.33333333):
        # Float array of zeros the same length as values. np.zeros_like(values)
        # would inherit an integer dtype from integer input and truncate results.
        smoothed_values = np.zeros(len(values), dtype=np.float64)
        smoothed_values[0] = values[0]  # Initialize the first value
        for i in range(1, len(values)):
            smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
        return smoothed_values
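One detail worth checking when the input is an integer array: `np.zeros_like` copies the dtype of its argument unless a dtype is passed, and NumPy truncates floats assigned into an integer array. The NumPy-only sketch below shows the difference. The variable names are made up for illustration.

```python
import numpy as np

ints = np.array([10, 20, 30])

int_buffer = np.zeros_like(ints)                      # inherits the integer dtype
float_buffer = np.zeros_like(ints, dtype=np.float64)  # explicit float dtype

int_buffer[1] = 0.5 * 20 + 0.5 * 15    # 17.5 is truncated to 17 on assignment
float_buffer[1] = 0.5 * 20 + 0.5 * 15  # 17.5 is stored unchanged

print(int_buffer.dtype, int_buffer[1])
print(float_buffer.dtype, float_buffer[1])
```

For a smoothing loop this matters because each truncated value feeds into the next step, so the error carries forward.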

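Simple, right? Let's see if JIT is doing anything now. First, we need to create a large array of integers. Then, we call the function, time how long it took to compute, and print the results.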

    # Generate a large random array of a million integers
    large_array = np.random.randint(1, 100, size=1_000_000)

    # Test the speed of fast_exponential_smoothing
    start_time = time.time()
    smoothed_result = fast_exponential_smoothing(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with 1,000,000 sample array.")

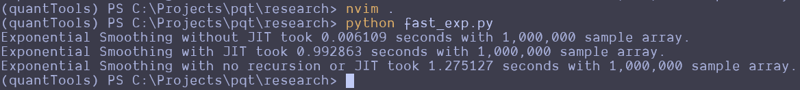

This can be repeated and altered just a bit to test the function without the JIT decorator. Here are the results that I got:

Wait, what the f***?

I thought JIT was supposed to speed it up. It looks like the standard Python function beat the JIT version and a version that attempts to use no recursion. That's strange. I guess you can't just slap the JIT decorator on something and make it go faster? Perhaps simple array loops and NumPy operations are already pretty efficient? Perhaps I don't understand the use case for JIT as well as I should? Maybe we should try this on a more complex loop?
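One possible contributor to this result, offered as an assumption since the timings above are not broken down: Numba compiles a function lazily, the first time it is called with a given set of argument types, so a single timed call also pays for compilation. The sketch below times the same call twice to separate the two. The names `smooth` and `timed` are made up for illustration, and the `try`/`except` fallback is only there so the snippet still runs where Numba is not installed (in which case nothing is compiled and both timings are plain Python).

```python
import time

import numpy as np

try:
    from numba import njit  # njit is shorthand for jit(nopython=True)
except ImportError:
    # Fallback (assumption for portability): without Numba, run as plain Python.
    def njit(func):
        return func


@njit
def smooth(values, alpha):
    out = np.empty(len(values), dtype=np.float64)
    out[0] = values[0]
    for i in range(1, len(values)):
        out[i] = alpha * values[i] + (1 - alpha) * out[i - 1]
    return out


data = np.random.randint(1, 100, size=1_000_000)


def timed(label):
    """Time one call to smooth() on the shared test array."""
    start = time.perf_counter()
    result = smooth(data, 1 / 3)
    elapsed = time.perf_counter() - start
    print(f"{label}: {elapsed:.6f} s")
    return result


first = timed("first call (includes compilation)")
second = timed("second call (compiled code only)")
```

If the second call is much faster than the first, the compile step was dominating the original measurement. Calling the function once on a small array before starting the timer is a common way to keep it out of the benchmark.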

Here is the entire Python file I created for testing:

    import numpy as np
    from numba import jit
    import time

    @jit(nopython=True)
    def fast_exponential_smoothing(values, alpha=0.33333333):
        # Float array of zeros the same length as values. np.zeros_like(values)
        # would inherit an integer dtype from integer input and truncate results.
        smoothed_values = np.zeros(len(values), dtype=np.float64)
        smoothed_values[0] = values[0]  # Initialize the first value
        for i in range(1, len(values)):
            smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
        return smoothed_values

    def fast_exponential_smoothing_nojit(values, alpha=0.33333333):
        # Same loop as above, without the JIT decorator
        smoothed_values = np.zeros(len(values), dtype=np.float64)
        smoothed_values[0] = values[0]  # Initialize the first value
        for i in range(1, len(values)):
            smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
        return smoothed_values

    def non_recursive_exponential_smoothing(values, alpha=0.33333333):
        # NOTE: the weights here are aligned to the end of the array, so the
        # output does not match the loop versions above. Treat this function
        # as a rough timing comparison for vectorized operations only.
        n = len(values)
        smoothed_values = np.zeros(n)
        # Initialize the first value
        smoothed_values[0] = values[0]
        # Calculate the rest of the smoothed values
        decay_factors = (1 - alpha) ** np.arange(1, n)
        cumulative_weights = alpha * decay_factors
        smoothed_values[1:] = np.cumsum(values[1:] * np.flip(cumulative_weights)) + decay_factors * values[0]
        return smoothed_values

    # Generate a large random array of integers
    large_array = np.random.randint(1, 1000, size=10_000_000)

    # Test the speed of fast_exponential_smoothing_nojit
    start_time = time.time()
    smoothed_result = fast_exponential_smoothing_nojit(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing without JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

    # Test the speed of fast_exponential_smoothing
    start_time = time.time()
    smoothed_result = fast_exponential_smoothing(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

    # Test the speed of non_recursive_exponential_smoothing
    start_time = time.time()
    smoothed_result = non_recursive_exponential_smoothing(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing with no recursion or JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

I attempted to create the non-recursive version to see if vectorized operations across arrays would make it go faster, but it seems to be pretty damn fast as it is. These results remained the same all the way up until I didn't have enough memory to make the array of random integers.
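As a side check that is not part of the original test file, a vectorized version can be compared against the loop on a small array before trusting its timings. The sketch below uses one possible closed form of the recurrence, S_t = (1 - alpha)^t * (x_0 + alpha * sum over j from 1 to t of x_j / (1 - alpha)^j). The function names are made up for illustration, and this form is only suitable for short arrays, because (1 - alpha)^t underflows to zero as t grows.

```python
import numpy as np


def loop_smoothing(values, alpha=1 / 3):
    """Reference implementation: the plain recurrence."""
    out = np.empty(len(values), dtype=np.float64)
    out[0] = values[0]
    for i in range(1, len(values)):
        out[i] = alpha * values[i] + (1 - alpha) * out[i - 1]
    return out


def closed_form_smoothing(values, alpha=1 / 3):
    """Vectorized closed form; numerically safe only for short arrays."""
    values = np.asarray(values, dtype=np.float64)
    decay = (1 - alpha) ** np.arange(len(values))  # (1 - alpha)^t
    scaled = values / decay                        # x_j / (1 - alpha)^j
    scaled[0] = values[0] / alpha                  # so alpha * scaled[0] == x_0
    return alpha * decay * np.cumsum(scaled)


sample = np.array([10, 20, 30, 40])
print(loop_smoothing(sample, alpha=0.5))
print(closed_form_smoothing(sample, alpha=0.5))
print(np.allclose(loop_smoothing(sample), closed_form_smoothing(sample)))
```

Running the same `np.allclose` comparison against `non_recursive_exponential_smoothing` is a quick way to see whether it computes the same values as the loop versions.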

Let me know what you think about this in the comments. I am by no means a professional developer, so I am accepting all comments, criticisms, or educational opportunities.

Until next time.

Happy coding!
