Training Language Models on Google Colab

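This article covers fine-tuning large language models (LLMs) such as BERT, Llama, BART, and models from Mistral AI and others on Google Colab.

The solution uses Google Drive to store intermediate results and model checkpoints, so your work survives a reset of the Colab environment. You need a Google account with enough Drive space. In Drive, create two folders: "data" (for training datasets) and "checkpoints" (for model checkpoints).

Mounting Google Drive in Colab:

First, mount Google Drive in your Colab notebook with the `drive.mount` command given in the code listings that follow this text.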
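The two folders can be created by hand in the Drive web interface. As an alternative, here is a minimal sketch that creates them from the notebook once Drive is mounted. The helper name `ensure_drive_folders` and the constant `DRIVE_ROOT` are illustrative and not part of the original article. The base path is taken from the paths used in the article's own listings.

```python
import os

# Assumption: Colab exposes "My Drive" here after drive.mount('/content/drive').
DRIVE_ROOT = "/content/drive/MyDrive"


def ensure_drive_folders(base_dir=DRIVE_ROOT, names=("data", "checkpoints")):
    """Create the named folders under base_dir if missing; return their paths."""
    paths = {}
    for name in names:
        path = os.path.join(base_dir, name)
        os.makedirs(path, exist_ok=True)  # no error if the folder already exists
        paths[name] = path
    return paths
```

Calling `ensure_drive_folders()` after mounting returns a dictionary with the two folder paths. Calling it again is harmless because existing folders are left untouched.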

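The core of the solution is a pair of functions that save and load model checkpoints. They serialize the state of your model, optimizer, and scheduler, along with other relevant information such as the epoch number and the loss. Both functions, `save_checkpoint` and `load_checkpoint`, are given in full in the code listings after this text.

Integrate these functions into your training loop. Before training starts, the loop should check for an existing checkpoint. If one is found, training resumes from the saved epoch.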

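This structure lets training resume seamlessly even if the Colab session is terminated. Remember to adapt the placeholder functions in the listings to your own setup.

Mounting Google Drive:

    from google.colab import drive

    drive.mount('/content/drive')

    # Confirm that both folders are visible from the notebook
    !ls /content/drive/MyDrive/data
    !ls /content/drive/MyDrive/checkpoints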

Save checkpoint function:

    import os

    import torch


    def save_checkpoint(epoch, model, optimizer, scheduler, loss, model_name, overwrite=True):
        """Write the training state for model_name to its checkpoint directory."""
        checkpoint = {
            'epoch': epoch,
            'model_state_dict': model.state_dict(),
            'optimizer_state_dict': optimizer.state_dict(),
            'scheduler_state_dict': scheduler.state_dict(),
            'loss': loss
        }
        direc = get_checkpoint_dir(model_name)
        os.makedirs(direc, exist_ok=True)  # Create directory if it doesn't exist
        if overwrite:
            # A single file that is replaced after every epoch
            file_path = os.path.join(direc, 'checkpoint.pth')
        else:
            # One file per epoch
            file_path = os.path.join(direc, f'epoch_{epoch}_checkpoint.pth')
        torch.save(checkpoint, file_path)
        print(f"Checkpoint saved at epoch {epoch}")


    # Example get_checkpoint_dir function (adapt to your needs)
    def get_checkpoint_dir(model_name):
        return os.path.join("/content/drive/MyDrive/checkpoints", model_name)
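With `overwrite=False`, every epoch leaves another file in Drive, which matters given the limited Drive space mentioned earlier. One possible way to cap this is sketched below, under the assumption that files follow the `epoch_{epoch}_checkpoint.pth` naming used by `save_checkpoint`. The helper `prune_checkpoints` and its `keep` parameter are hypothetical additions, not part of the original article.

```python
import os
import re

# Matches the per-epoch names written by save_checkpoint(..., overwrite=False).
EPOCH_FILE = re.compile(r"^epoch_(\d+)_checkpoint\.pth$")


def prune_checkpoints(direc, keep=3):
    """Delete all but the `keep` highest-epoch checkpoint files in direc.

    Returns the names of the deleted files, lowest epoch first.
    Files that do not match the per-epoch pattern are never touched.
    """
    if keep < 1:
        raise ValueError("keep must be at least 1")
    numbered = []
    for name in os.listdir(direc):
        match = EPOCH_FILE.match(name)
        if match:
            numbered.append((int(match.group(1)), name))
    numbered.sort()  # ascending by epoch number
    to_delete = [name for _, name in numbered[:-keep]]
    for name in to_delete:
        os.remove(os.path.join(direc, name))
    return to_delete
```

It could be called right after `save_checkpoint` inside the epoch loop. How quickly deleted files stop counting against the Drive quota depends on how Drive handles its trash, which this sketch does not address.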

Load checkpoint function:

    import os

    import torch


    def load_checkpoint(model_name, model, optimizer, scheduler):
        """Restore the latest checkpoint in place; return (epoch, loss), or (0, None) if none exists."""
        direc = get_checkpoint_dir(model_name)
        if not os.path.exists(direc):
            print(f"No checkpoint directory found for {model_name}, starting from epoch 1.")
            return 0, None

        # Find checkpoint with highest epoch (adapt to your naming convention).
        # 'epoch_7_checkpoint.pth' sorts by 7; 'checkpoint.pth' has no '_' and sorts as 0.
        checkpoints = [f for f in os.listdir(direc) if f.endswith('.pth')]
        if not checkpoints:
            print("No checkpoints found in directory.")
            return 0, None

        latest_checkpoint = max(checkpoints, key=lambda x: int(x.split('_')[-2]) if '_' in x else 0)
        file_path = os.path.join(direc, latest_checkpoint)

        # Load onto the CPU first; load_state_dict copies the values into the existing objects
        checkpoint = torch.load(file_path, map_location=torch.device('cpu'))
        model.load_state_dict(checkpoint['model_state_dict'])
        optimizer.load_state_dict(checkpoint['optimizer_state_dict'])
        scheduler.load_state_dict(checkpoint['scheduler_state_dict'])
        epoch = checkpoint['epoch']
        loss = checkpoint['loss']
        print(f"Checkpoint loaded from epoch {epoch}")
        return epoch, loss
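The filename parsing in `load_checkpoint` assumes every `.pth` file in the folder follows the article's naming convention. A file such as `best_model.pth` would make the `int(...)` call raise a `ValueError`. A more defensive variant might look like the sketch below. The name `find_latest_checkpoint` is hypothetical, and the preference order (numbered files first, then `checkpoint.pth`) simply mirrors the original lambda.

```python
import os
import re

# Per-epoch names written by save_checkpoint(..., overwrite=False).
EPOCH_FILE = re.compile(r"^epoch_(\d+)_checkpoint\.pth$")


def find_latest_checkpoint(direc):
    """Return the path of the checkpoint to resume from, or None.

    Prefers the highest-numbered epoch_N_checkpoint.pth, falls back to
    checkpoint.pth (the overwrite=True name), and ignores all other files.
    """
    if not os.path.isdir(direc):
        return None
    best_epoch, best_name = -1, None
    for name in os.listdir(direc):
        match = EPOCH_FILE.match(name)
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best_name = int(match.group(1)), name
    if best_name is None and os.path.isfile(os.path.join(direc, "checkpoint.pth")):
        best_name = "checkpoint.pth"
    return os.path.join(direc, best_name) if best_name else None
```

The returned path could then be passed to `torch.load` in place of the `max(...)` selection. A return value of `None` corresponds to the "start from scratch" branches of `load_checkpoint`.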

Training loop:

    EPOCHS = 10

    for exp in experiments:  # Assuming 'experiments' is a list of your experiment configurations
        model, optimizer, scheduler = initialise_model_components(exp)  # Your model initialization function
        train_loader, val_loader = generate_data_loaders(exp)  # Your data loader function

        # Returns 0 when no checkpoint exists, so training starts at the first epoch.
        # exp is also used as the checkpoint folder name, so it must work as a path component.
        start_epoch, prev_loss = load_checkpoint(exp, model, optimizer, scheduler)

        for epoch in range(start_epoch, EPOCHS):
            print(f'Epoch {epoch + 1}/{EPOCHS}')

            # YOUR TRAINING CODE HERE... (training loop; it is expected to set train_loss)

            save_checkpoint(epoch + 1, model, optimizer, scheduler, train_loss, exp)  # Save after each epoch
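The resume behavior of this loop can be tried out without a GPU or PyTorch. The toy sketch below is an illustration only, not the article's code. It stores its state as JSON instead of using `torch.save`, and `run_training` and its `stop_after` parameter are hypothetical names that simulate a Colab session ending partway through training.

```python
import json
import os


def run_training(ckpt_path, total_epochs, stop_after=None):
    """Toy loop that checkpoints after every epoch and resumes if a checkpoint exists.

    stop_after simulates a session being terminated after that many epochs
    in this call. Returns the (1-based) epochs executed during this call.
    """
    state = {"epoch": 0, "history": []}
    if os.path.exists(ckpt_path):  # resume from the saved state
        with open(ckpt_path) as f:
            state = json.load(f)
    executed = []
    for epoch in range(state["epoch"], total_epochs):
        if stop_after is not None and len(executed) == stop_after:
            break  # simulated session termination
        state["history"].append(epoch + 1)  # stand-in for real training work
        state["epoch"] = epoch + 1  # number of completed epochs
        with open(ckpt_path, "w") as f:
            json.dump(state, f)
        executed.append(epoch + 1)
    return executed
```

Calling `run_training(path, total_epochs=5, stop_after=2)` and then `run_training(path, total_epochs=5)` with the same path runs epochs 1 and 2 in the first call and epochs 3 to 5 in the second. As in the loop above, the stored value is the number of completed epochs, which is why `range` can start directly from it.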

以上是培训语言模型在Google Colab上的详细内容。更多信息请关注PHP中文网其他相关文章!

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

Video Face Swap

使用我们完全免费的人工智能换脸工具轻松在任何视频中换脸!

热门文章

热工具

记事本++7.3.1

好用且免费的代码编辑器

SublimeText3汉化版

中文版,非常好用

禅工作室 13.0.1

功能强大的PHP集成开发环境

Dreamweaver CS6

视觉化网页开发工具

SublimeText3 Mac版

神级代码编辑软件(SublimeText3)

如何使用AGNO框架构建多模式AI代理?

Apr 23, 2025 am 11:30 AM

如何使用AGNO框架构建多模式AI代理?

Apr 23, 2025 am 11:30 AM

在从事代理AI时,开发人员经常发现自己在速度,灵活性和资源效率之间进行权衡。我一直在探索代理AI框架,并遇到了Agno(以前是Phi-

OpenAI以GPT-4.1的重点转移,将编码和成本效率优先考虑

Apr 16, 2025 am 11:37 AM

OpenAI以GPT-4.1的重点转移,将编码和成本效率优先考虑

Apr 16, 2025 am 11:37 AM

该版本包括三种不同的型号,GPT-4.1,GPT-4.1 MINI和GPT-4.1 NANO,标志着向大语言模型景观内的特定任务优化迈进。这些模型并未立即替换诸如

如何在SQL中添加列? - 分析Vidhya

Apr 17, 2025 am 11:43 AM

如何在SQL中添加列? - 分析Vidhya

Apr 17, 2025 am 11:43 AM

SQL的Alter表语句:动态地将列添加到数据库 在数据管理中,SQL的适应性至关重要。 需要即时调整数据库结构吗? Alter表语句是您的解决方案。本指南的详细信息添加了Colu

Andrew Ng的新简短课程

Apr 15, 2025 am 11:32 AM

Andrew Ng的新简短课程

Apr 15, 2025 am 11:32 AM

解锁嵌入模型的力量:深入研究安德鲁·NG的新课程 想象一个未来,机器可以完全准确地理解和回答您的问题。 这不是科幻小说;多亏了AI的进步,它已成为R

火箭发射模拟和分析使用Rocketpy -Analytics Vidhya

Apr 19, 2025 am 11:12 AM

火箭发射模拟和分析使用Rocketpy -Analytics Vidhya

Apr 19, 2025 am 11:12 AM

模拟火箭发射的火箭发射:综合指南 本文指导您使用强大的Python库Rocketpy模拟高功率火箭发射。 我们将介绍从定义火箭组件到分析模拟的所有内容

Google揭示了下一个2025年云上最全面的代理策略

Apr 15, 2025 am 11:14 AM

Google揭示了下一个2025年云上最全面的代理策略

Apr 15, 2025 am 11:14 AM

双子座是Google AI策略的基础 双子座是Google AI代理策略的基石,它利用其先进的多模式功能来处理和生成跨文本,图像,音频,视频和代码的响应。由DeepM开发

您可以自己3D打印的开源人形机器人:拥抱面孔购买花粉机器人技术

Apr 15, 2025 am 11:25 AM

您可以自己3D打印的开源人形机器人:拥抱面孔购买花粉机器人技术

Apr 15, 2025 am 11:25 AM

“超级乐于宣布,我们正在购买花粉机器人,以将开源机器人带到世界上,” Hugging Face在X上说:“自从Remi Cadene加入Tesla以来,我们已成为开放机器人的最广泛使用的软件平台。

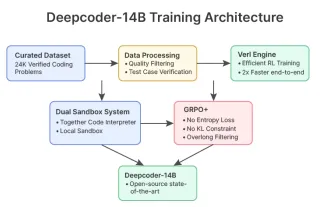

DeepCoder-14b:O3-Mini和O1的开源竞赛

Apr 26, 2025 am 09:07 AM

DeepCoder-14b:O3-Mini和O1的开源竞赛

Apr 26, 2025 am 09:07 AM

在AI社区的重大发展中,Agentica和AI共同发布了一个名为DeepCoder-14B的开源AI编码模型。与OpenAI等封闭源竞争对手提供代码生成功能