使用innobackupex基于从库搭建mysql主从架构

MySQL的主从搭建大家有很多种方式,传统的mysqldump方式是很多人的选择之一。但对于较大的数据库则该方式并非理想的选择。使用Xtrabackup可以快速轻松的构建或修复mysql主从架构。本文描述了基于现有的从库来快速搭建主从,即作为原主库的一个新从库。该方式的好处是对主库无需备份期间导致的相关性能压力。搭建过程中使用了快速流备份方式来加速主从构建以及描述了加速流式备份的几个参数,供大家参考。

有关流式备份可以参考:Xtrabackup 流备份与恢复

1、备份从库

###远程备份期间使用了等效性验证,因此应先作相应配置,这里我们使用的是mysql用户

$ innobackupex --user=root --password=xxx --slave-info --safe-slave-backup \--compress-threads=3 --parallel=3 --stream=xbstream \--compress /log | ssh -p50021 mysql@172.16.16.10 "xbstream -x -C /log/recover"

###备份期间使用了safe-slave-backup参数,可以看到SQL thread被停止,完成后被启动

$ mysql -uroot -p -e "show slave status \G"|egrep 'Slave_IO_Running|Slave_SQL_Running'

Enter password:

Slave_IO_Running: Yes

Slave_SQL_Running: No

Slave_SQL_Running_State: Slave has read all relay log; waiting for the slave I/O thread to update it###复制my.cnf文件到新从库

$ scp -P50021 /etc/my.cnf mysql@172.16.16.10:/log/recover

2、主库授予新从库复制账户

master@MySQL> grant replication slave,replication client on *.* to repl@'172.16.%.%' identified by 'repl';

3、新从库prepare

###由于使用了流式压缩备份,因此需要先解压

###下载地址 http://www.php.cn/

# tar -xvf qpress-11-linux-x64.tar qpress# cp qpress /usr/bin/ $ innobackupex --decompress /log/recover ###解压$ innobackupex --apply-log --use-memory=2G /log/recover ###prepare备份

4、准备从库配置文件my.cnf

###根据需要修改相应参数,这里的修改如下,

skip-slave-start datadir = /log/recover port = 3307 server_id = 24 socket = /tmp/mysql3307.sock pid-file=/log/recover/mysql3307.pid log_error=/log/recover/recover.err

5、启动从库及修改change master

# chown -R mysql:mysql /log/recover

# /app/soft/mysql/bin/mysqld_safe --defaults-file=/log/recover/my.cnf &

mysql> system more /log/recover/xtrabackup_slave_info

CHANGE MASTER TO MASTER_LOG_FILE='mysql-bin.000658', MASTER_LOG_POS=925384099

mysql> CHANGE MASTER TO

-> MASTER_HOST='172.16.16.10',

### Author: Leshami

-> MASTER_USER='repl',

### Blog :

http://www.php.cn/

-> MASTER_PASSWORD='repl',

-> MASTER_PORT=3306,

-> MASTER_LOG_FILE='mysql-bin.000658',

-> MASTER_LOG_POS=925384099;

Query OK, 0 rows affected, 2 warnings (0.31 sec)mysql> start slave;

Query OK, 0 rows affected (0.02 sec)

6、基于从库备份相关参数及加速流备份参数

The --slave-info option This option is useful when backing up a replication slave server. It prints the binary log position and name of the master server. It also writes this information to the xtrabackup_slave_info file as a CHANGE MASTER statement. This is useful for setting up a new slave for this master can be set up by starting a slave server on this backup and issuing the statement saved in the xtrabackup_slave_info file.

The --safe-slave-backup option In order to assure a consistent replication state, this option stops the slave SQL thread and wait to start backing up until Slave_open_temp_tables in SHOW STATUS is zero. If there are no open temporary tables, the backup will take place, otherwise the SQL thread will be started and stopped until there are no open temporary tables. The backup will fail if Slave_open_temp_tables does not become zero after --safe-slave-backup-timeout seconds (defaults to 300 seconds). The slave SQL thread will be restarted when the backup finishes. Using this option is always recommended when taking backups from a slave server.

Warning: Make sure your slave is a true replica of the master before using it as a source for backup. A good tool

to validate a slave is pt-table-checksum.

--compress

This option instructs xtrabackup to compress backup copies of InnoDB

data files. It is passed directly to the xtrabackup child process. ###注compress方式是一种相对粗糙的压缩方式,压缩为.gp文件,没有gzip压缩比高

--compress-threads

This option specifies the number of worker threads that will be used

for parallel compression. It is passed directly to the xtrabackup

child process. Try 'xtrabackup --help' for more details.--decompress

Decompresses all files with the .qp extension in a backup previously

made with the --compress option. --parallel=NUMBER-OF-THREADS

On backup, this option specifies the number of threads the

xtrabackup child process should use to back up files concurrently.

The option accepts an integer argument. It is passed directly to

xtrabackup's --parallel option. See the xtrabackup documentation for

details.

On --decrypt or --decompress it specifies the number of parallel

forks that should be used to process the backup files.以上就是使用innobackupex基于从库搭建mysql主从架构的内容,更多相关内容请关注PHP中文网(www.php.cn)!

热AI工具

Undresser.AI Undress

人工智能驱动的应用程序,用于创建逼真的裸体照片

AI Clothes Remover

用于从照片中去除衣服的在线人工智能工具。

Undress AI Tool

免费脱衣服图片

Clothoff.io

AI脱衣机

AI Hentai Generator

免费生成ai无尽的。

热门文章

热工具

记事本++7.3.1

好用且免费的代码编辑器

SublimeText3汉化版

中文版,非常好用

禅工作室 13.0.1

功能强大的PHP集成开发环境

Dreamweaver CS6

视觉化网页开发工具

SublimeText3 Mac版

神级代码编辑软件(SublimeText3)

热门话题

mysql用户和数据库的关系

Apr 08, 2025 pm 07:15 PM

mysql用户和数据库的关系

Apr 08, 2025 pm 07:15 PM

MySQL 数据库中,用户和数据库的关系通过权限和表定义。用户拥有用户名和密码,用于访问数据库。权限通过 GRANT 命令授予,而表由 CREATE TABLE 命令创建。要建立用户和数据库之间的关系,需创建数据库、创建用户,然后授予权限。

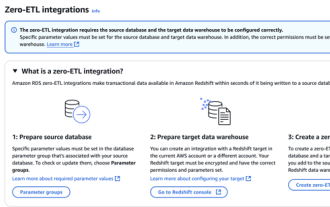

RDS MySQL 与 Redshift 零 ETL 集成

Apr 08, 2025 pm 07:06 PM

RDS MySQL 与 Redshift 零 ETL 集成

Apr 08, 2025 pm 07:06 PM

数据集成简化:AmazonRDSMySQL与Redshift的零ETL集成高效的数据集成是数据驱动型组织的核心。传统的ETL(提取、转换、加载)流程复杂且耗时,尤其是在将数据库(例如AmazonRDSMySQL)与数据仓库(例如Redshift)集成时。然而,AWS提供的零ETL集成方案彻底改变了这一现状,为从RDSMySQL到Redshift的数据迁移提供了简化、近乎实时的解决方案。本文将深入探讨RDSMySQL零ETL与Redshift集成,阐述其工作原理以及为数据工程师和开发者带来的优势。

mysql 是否要付费

Apr 08, 2025 pm 05:36 PM

mysql 是否要付费

Apr 08, 2025 pm 05:36 PM

MySQL 有免费的社区版和收费的企业版。社区版可免费使用和修改,但支持有限,适合稳定性要求不高、技术能力强的应用。企业版提供全面商业支持,适合需要稳定可靠、高性能数据库且愿意为支持买单的应用。选择版本时考虑的因素包括应用关键性、预算和技术技能。没有完美的选项,只有最合适的方案,需根据具体情况谨慎选择。

MySQL 中的查询优化对于提高数据库性能至关重要,尤其是在处理大型数据集时

Apr 08, 2025 pm 07:12 PM

MySQL 中的查询优化对于提高数据库性能至关重要,尤其是在处理大型数据集时

Apr 08, 2025 pm 07:12 PM

1.使用正确的索引索引通过减少扫描的数据量来加速数据检索select*fromemployeeswherelast_name='smith';如果多次查询表的某一列,则为该列创建索引如果您或您的应用根据条件需要来自多个列的数据,则创建复合索引2.避免选择*仅选择那些需要的列,如果您选择所有不需要的列,这只会消耗更多的服务器内存并导致服务器在高负载或频率时间下变慢例如,您的表包含诸如created_at和updated_at以及时间戳之类的列,然后避免选择*,因为它们在正常情况下不需要低效查询se

mysql用户名和密码怎么填

Apr 08, 2025 pm 07:09 PM

mysql用户名和密码怎么填

Apr 08, 2025 pm 07:09 PM

要填写 MySQL 用户名和密码,请:1. 确定用户名和密码;2. 连接到数据库;3. 使用用户名和密码执行查询和命令。

如何针对高负载应用程序优化 MySQL 性能?

Apr 08, 2025 pm 06:03 PM

如何针对高负载应用程序优化 MySQL 性能?

Apr 08, 2025 pm 06:03 PM

MySQL数据库性能优化指南在资源密集型应用中,MySQL数据库扮演着至关重要的角色,负责管理海量事务。然而,随着应用规模的扩大,数据库性能瓶颈往往成为制约因素。本文将探讨一系列行之有效的MySQL性能优化策略,确保您的应用在高负载下依然保持高效响应。我们将结合实际案例,深入讲解索引、查询优化、数据库设计以及缓存等关键技术。1.数据库架构设计优化合理的数据库架构是MySQL性能优化的基石。以下是一些核心原则:选择合适的数据类型选择最小的、符合需求的数据类型,既能节省存储空间,又能提升数据处理速度

mysql怎么复制粘贴

Apr 08, 2025 pm 07:18 PM

mysql怎么复制粘贴

Apr 08, 2025 pm 07:18 PM

MySQL 中的复制粘贴包含以下步骤:选择数据,使用 Ctrl C(Windows)或 Cmd C(Mac)复制;在目标位置右键单击,选择“粘贴”或使用 Ctrl V(Windows)或 Cmd V(Mac);复制的数据将插入到目标位置,或替换现有数据(取决于目标位置是否已存在数据)。

了解 ACID 属性:可靠数据库的支柱

Apr 08, 2025 pm 06:33 PM

了解 ACID 属性:可靠数据库的支柱

Apr 08, 2025 pm 06:33 PM

数据库ACID属性详解ACID属性是确保数据库事务可靠性和一致性的一组规则。它们规定了数据库系统处理事务的方式,即使在系统崩溃、电源中断或多用户并发访问的情况下,也能保证数据的完整性和准确性。ACID属性概述原子性(Atomicity):事务被视为一个不可分割的单元。任何部分失败,整个事务回滚,数据库不保留任何更改。例如,银行转账,如果从一个账户扣款但未向另一个账户加款,则整个操作撤销。begintransaction;updateaccountssetbalance=balance-100wh